mirror of https://github.com/kestra-io/kestra.git

synced 2025-12-25 11:12:12 -05:00

Compare commits

17 Commits

global-sta ... v0.16.2

| SHA1 |
|---|
| c757827b9d |
| 2a5c82b2a3 |
| 15bb0ee65b |
| d06e8dad6e |
| e9f5752278 |
| c16c5ddaf5 |
| b706ca1911 |
| 366246e0a8 |
| dcea4551cc |
| c0ff6fcc52 |
| 0525e7eaca |
| d1fb098f5b |
| 9703cc48cb |
| 31c3e5a4f6 |
| bda52eb49d |
| 8a54b8ec7f |
| c34c82c1f9 |

@@ -1,82 +0,0 @@
FROM ubuntu:24.04

ARG BUILDPLATFORM
ARG DEBIAN_FRONTEND=noninteractive

USER root
WORKDIR /root

RUN apt update && apt install -y \
    apt-transport-https ca-certificates gnupg curl wget git zip unzip less zsh net-tools iputils-ping jq lsof

ENV HOME="/root"

# --------------------------------------
# Git
# --------------------------------------
# Need to add the devcontainer workspace folder as a safe directory to enable git
# version control system to be enabled in the containers file system.
RUN git config --global --add safe.directory "/workspaces/kestra"
# --------------------------------------

# --------------------------------------
# Oh my zsh
# --------------------------------------
RUN sh -c "$(curl -fsSL https://raw.githubusercontent.com/ohmyzsh/ohmyzsh/master/tools/install.sh)" -- \
    -t robbyrussell \
    -p git -p node -p npm

ENV SHELL=/bin/zsh
# --------------------------------------

# --------------------------------------
# Java
# --------------------------------------
ARG OS_ARCHITECTURE

RUN mkdir -p /usr/java
RUN echo "Building on platform: $BUILDPLATFORM"
RUN case "$BUILDPLATFORM" in \
    "linux/amd64") OS_ARCHITECTURE="x64_linux" ;; \
    "linux/arm64") OS_ARCHITECTURE="aarch64_linux" ;; \
    "darwin/amd64") OS_ARCHITECTURE="x64_mac" ;; \
    "darwin/arm64") OS_ARCHITECTURE="aarch64_mac" ;; \
    *) echo "Unsupported BUILDPLATFORM: $BUILDPLATFORM" && exit 1 ;; \
    esac && \
    wget "https://github.com/adoptium/temurin21-binaries/releases/download/jdk-21.0.7%2B6/OpenJDK21U-jdk_${OS_ARCHITECTURE}_hotspot_21.0.7_6.tar.gz" && \
    mv OpenJDK21U-jdk_${OS_ARCHITECTURE}_hotspot_21.0.7_6.tar.gz openjdk-21.0.7.tar.gz
RUN tar -xzvf openjdk-21.0.7.tar.gz && \
    mv jdk-21.0.7+6 jdk-21 && \
    mv jdk-21 /usr/java/
ENV JAVA_HOME=/usr/java/jdk-21
ENV PATH="$PATH:$JAVA_HOME/bin"
# Will load a custom configuration file for Micronaut
ENV MICRONAUT_ENVIRONMENTS=local,override
# Sets the path where you save plugins as Jar and is loaded during the startup process
ENV KESTRA_PLUGINS_PATH="/workspaces/kestra/local/plugins"
# --------------------------------------

# --------------------------------------
# Node.js
# --------------------------------------
RUN curl -fsSL https://deb.nodesource.com/setup_22.x -o nodesource_setup.sh \
    && bash nodesource_setup.sh && apt install -y nodejs
# Increases JavaScript heap memory to 4GB to prevent heap out of error during startup
ENV NODE_OPTIONS=--max-old-space-size=4096
# --------------------------------------

# --------------------------------------
# Python
# --------------------------------------
RUN apt install -y python3 pip python3-venv
# --------------------------------------

# --------------------------------------
# SSH
# --------------------------------------
RUN mkdir -p ~/.ssh
RUN touch ~/.ssh/config
RUN echo "Host github.com" >> ~/.ssh/config \
    && echo " IdentityFile ~/.ssh/id_ed25519" >> ~/.ssh/config
RUN touch ~/.ssh/id_ed25519
# --------------------------------------
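The Java section of the removed Dockerfile maps Docker's `BUILDPLATFORM` value to the architecture token used in Adoptium's Temurin 21.0.7+6 asset names, then downloads that tarball. As a minimal sketch, the same lookup table and URL template can be written in Python. The helper name `temurin_jdk_url` is hypothetical and not part of Kestra.

```python
"""Sketch of the BUILDPLATFORM -> Temurin JDK URL mapping from the Dockerfile."""

# Same table as the `case "$BUILDPLATFORM" in ... esac` block.
OS_ARCHITECTURES = {
    "linux/amd64": "x64_linux",
    "linux/arm64": "aarch64_linux",
    "darwin/amd64": "x64_mac",
    "darwin/arm64": "aarch64_mac",
}

# Release tag jdk-21.0.7+6, with "+" URL-encoded as %2B exactly as in the wget line.
RELEASE_URL = (
    "https://github.com/adoptium/temurin21-binaries/releases/download/jdk-21.0.7%2B6"
)


def temurin_jdk_url(buildplatform: str) -> str:
    """Return the tarball URL the Dockerfile would download for `buildplatform`."""
    arch = OS_ARCHITECTURES.get(buildplatform)
    if arch is None:
        # Mirrors the `*)` branch, which prints a message and exits with status 1.
        raise ValueError(f"Unsupported BUILDPLATFORM: {buildplatform}")
    return f"{RELEASE_URL}/OpenJDK21U-jdk_{arch}_hotspot_21.0.7_6.tar.gz"


if __name__ == "__main__":
    for platform in ("linux/amd64", "linux/arm64"):
        print(platform, "->", temurin_jdk_url(platform))
```

The image is Ubuntu-based, so only the `linux/*` entries produce a JDK that can run inside it. BuildKit's `BUILDPLATFORM` describes the machine running the build, while `TARGETPLATFORM` describes the image being built.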

@@ -1,151 +0,0 @@
# Kestra Devcontainer

This devcontainer provides a quick and easy setup for anyone using VSCode to get up and running quickly with this project to start development on either the frontend or backend. It bootstraps a docker container for you to develop inside of without the need to manually setup the environment.

---

## INSTRUCTIONS

### Setup:

Take a look at this guide to get an idea of what the setup is like as this devcontainer setup follows this approach: https://kestra.io/docs/getting-started/contributing

Once you have this repo cloned to your local system, you will need to install the VSCode extension [Remote Development](https://marketplace.visualstudio.com/items?itemName=ms-vscode-remote.vscode-remote-extensionpack).

Then run the following command from the command palette:
`Dev Containers: Open Folder in Container...` and select your Kestra root folder.

This will then put you inside a docker container ready for development.

NOTE: you'll need to wait for the gradle build to finish and compile Java files but this process should happen automatically within VSCode.

In the meantime, you can move onto the next step...

---

### Requirements

- Java 21 (LTS versions).
> ⚠️ Java 24 and above are not supported yet and will fail with `invalid source release: 21`.
- Gradle (comes with wrapper `./gradlew`)
- Docker (optional, for running Kestra in containers)

### Development:

- (Optional) By default, your dev server will target `localhost:8080`. If your backend is running elsewhere, you can create `.env.development.local` under `ui` folder with this content:
```
VITE_APP_API_URL={myApiUrl}
```

- Navigate into the `ui` folder and run `npm install` to install the dependencies for the frontend project.

- Now go to the `cli/src/main/resources` folder and create a `application-override.yml` file.

Now you have two choices:

`Local mode`:

Runs the Kestra server in local mode which uses a H2 database, so this is the only config you'd need:

```yaml
micronaut:
  server:
    cors:
      enabled: true
      configurations:
        all:
          allowedOrigins:
            - http://localhost:5173
```

You can then open a new terminal and run the following command to start the backend server: `./gradlew runLocal`

`Standalone mode`:

Runs in standalone mode which uses Postgres. Make sure to have a local Postgres instance already running on localhost:

```yaml
kestra:
  repository:
    type: postgres
  storage:
    type: local
    local:
      base-path: "/app/storage"
  queue:
    type: postgres
  tasks:
    tmp-dir:
      path: /tmp/kestra-wd/tmp
  anonymous-usage-report:
    enabled: false

datasources:
  postgres:
    # It is important to note that you must use the "host.docker.internal" host when connecting to a docker container outside of your devcontainer as attempting to use localhost will only point back to this devcontainer.
    url: jdbc:postgresql://host.docker.internal:5432/kestra
    driverClassName: org.postgresql.Driver
    username: kestra
    password: k3str4

flyway:
  datasources:
    postgres:
      enabled: true
      locations:
        - classpath:migrations/postgres
      # We must ignore missing migrations as we may delete the wrong ones or delete those that are not used anymore.
      ignore-migration-patterns: "*:missing,*:future"
      out-of-order: true

micronaut:
  server:
    cors:
      enabled: true
      configurations:
        all:
          allowedOrigins:
            - http://localhost:5173
```

Then add the following settings to the `.vscode/launch.json` file:

```json
{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "java",
      "name": "Kestra Standalone",
      "request": "launch",
      "mainClass": "io.kestra.cli.App",
      "projectName": "cli",
      "args": "server standalone"
    }
  ]
}
```

You can then use the VSCode `Run and Debug` extension to start the Kestra server.

Additionally, if you're doing frontend development, you can run `npm run dev` from the `ui` folder after having the above running (which will provide a backend) to access your application from `localhost:5173`. This has the benefit to watch your changes and hot-reload upon doing frontend changes.

#### Plugins
If you want your plugins to be loaded inside your devcontainer, point the `source` field to a folder containing jars of the plugins you want to embed in the following snippet in `devcontainer.json`:
```
"mounts": [
  {
    "source": "/absolute/path/to/your/local/jar/plugins/folder",
    "target": "/workspaces/kestra/local/plugins",
    "type": "bind"
  }
],
```

---

### GIT

If you want to commit to GitHub, make sure to navigate to the `~/.ssh` folder and either create a new SSH key or override the existing `id_ed25519` file and paste an existing SSH key from your local machine into this file. You will then need to change the permissions of the file by running: `chmod 600 id_ed25519`. This will allow you to then push to GitHub.

---
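The Dockerfile sets `MICRONAUT_ENVIRONMENTS=local,override`, and CONTRIBUTING.md says each environment name loads a custom `cli/src/main/resources/application-{env}.yml`. That is why the README asks for an `application-override.yml`. The sketch below only illustrates this name-to-file convention. `config_files_for` is a hypothetical helper, and Micronaut's real resolution rules (such as precedence between environments) are not modelled.

```python
from pathlib import PurePosixPath

# Folder named in CONTRIBUTING.md for the per-environment configuration files.
RESOURCES_DIR = PurePosixPath("cli/src/main/resources")


def config_files_for(micronaut_environments: str) -> list[str]:
    """List the application-{env}.yml paths named by a MICRONAUT_ENVIRONMENTS value."""
    envs = [env.strip() for env in micronaut_environments.split(",") if env.strip()]
    return [str(RESOURCES_DIR / f"application-{env}.yml") for env in envs]


if __name__ == "__main__":
    for path in config_files_for("local,override"):
        print(path)
```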

@@ -1,46 +0,0 @@
{
  "name": "kestra",
  "build": {
    "context": ".",
    "dockerfile": "Dockerfile"
  },
  "workspaceFolder": "/workspaces/kestra",
  "forwardPorts": [5173, 8080],
  "customizations": {
    "vscode": {
      "settings": {
        "terminal.integrated.profiles.linux": {
          "zsh": {
            "path": "/bin/zsh"
          }
        },
        "workbench.iconTheme": "vscode-icons",
        "editor.tabSize": 4,
        "editor.formatOnSave": true,
        "files.insertFinalNewline": true,
        "editor.defaultFormatter": "esbenp.prettier-vscode",
        "telemetry.telemetryLevel": "off",
        "editor.bracketPairColorization.enabled": true,
        "editor.guides.bracketPairs": "active"
      },
      "extensions": [
        "redhat.vscode-yaml",
        "dbaeumer.vscode-eslint",
        "vscode-icons-team.vscode-icons",
        "eamodio.gitlens",
        "esbenp.prettier-vscode",
        "aaron-bond.better-comments",
        "codeandstuff.package-json-upgrade",
        "andys8.jest-snippets",
        "oderwat.indent-rainbow",
        "evondev.indent-rainbow-palettes",
        "formulahendry.auto-rename-tag",
        "IronGeek.vscode-env",
        "yoavbls.pretty-ts-errors",
        "github.vscode-github-actions",
        "vscjava.vscode-java-pack",
        "docker.docker"
      ]
    }
  }
}

46
.github/CONTRIBUTING.md
vendored

@@ -1,7 +1,7 @@
## Code of Conduct

This project and everyone participating in it is governed by the
[Kestra Code of Conduct](https://github.com/kestra-io/kestra/blob/develop/.github/CODE_OF_CONDUCT.md).
[Kestra Code of Conduct](https://github.com/kestra-io/kestrablob/master/CODE_OF_CONDUCT.md).
By participating, you are expected to uphold this code. Please report unacceptable behavior
to <hello@kestra.io>.

@@ -31,16 +31,12 @@ Watch out for duplicates! If you are creating a new issue, please check existing

#### Requirements
The following dependencies are required to build Kestra locally:
- Java 21+
- Node 18+ and npm
- Java 17+, Kestra runs on Java 11 but we hit a Java compiler bug fixed in Java 17
- Node 14+ and npm
- Python 3, pip and python venv
- Docker & Docker Compose
- an IDE (Intellij IDEA, Eclipse or VS Code)

Thanks to the Kestra community, if using VSCode, you can also start development on either the frontend or backend with a bootstrapped docker container without the need to manually set up the environment.

Check out the [README](../.devcontainer/README.md) for set-up instructions and the associated [Dockerfile](../.devcontainer/Dockerfile) in the respository to get started.

To start contributing:
- [Fork](https://docs.github.com/en/github/getting-started-with-github/fork-a-repo) the repository
- Clone the fork on your workstation:

@@ -50,23 +46,20 @@ git clone git@github.com:{YOUR_USERNAME}/kestra.git
cd kestra
```

#### Develop on the backend
#### Develop backend
The backend is made with [Micronaut](https://micronaut.io).

Open the cloned repository in your favorite IDE. In most of decent IDEs, Gradle build will be detected and all dependencies will be downloaded.
You can also build it from a terminal using `./gradlew build`, the Gradle wrapper will download the right Gradle version to use.

- You may need to enable java annotation processors since we are using them.

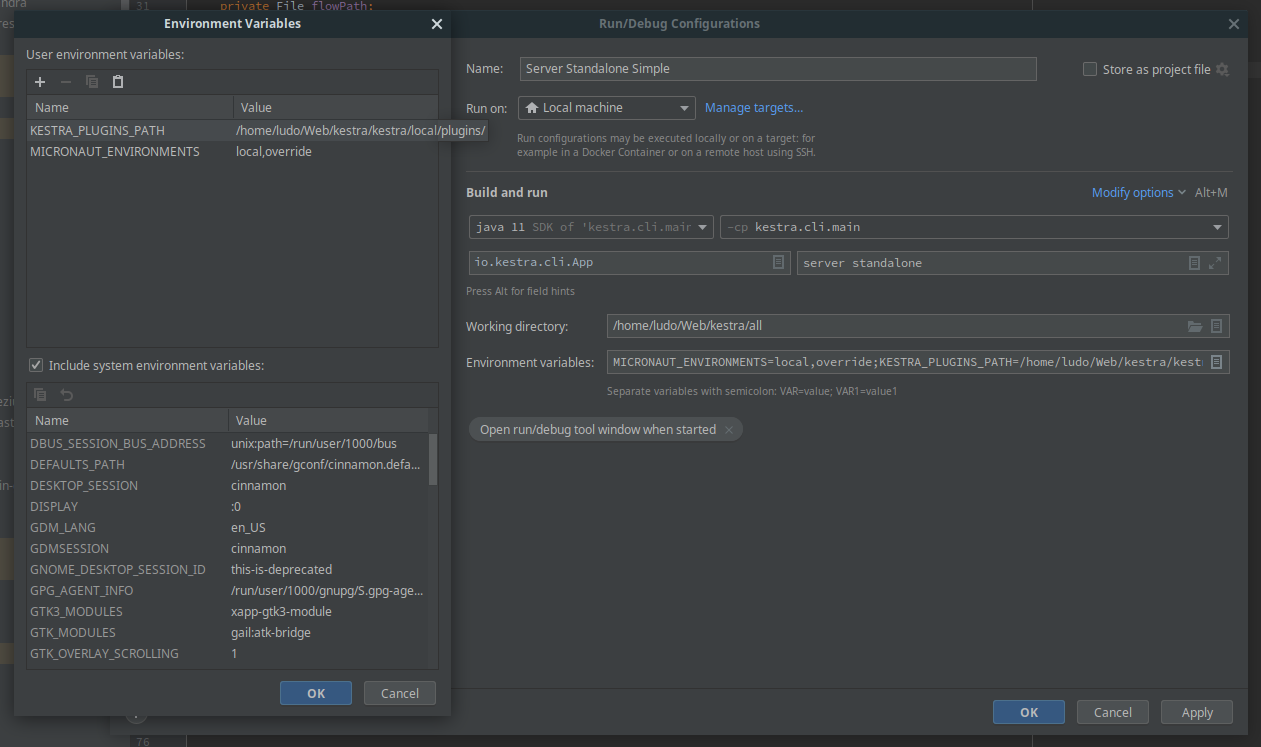

- On IntelliJ IDEA, click on **Run -> Edit Configurations -> + Add new Configuration** to create a run configuration to start Kestra.
- The main class is `io.kestra.cli.App` from module `kestra.cli.main`.
- Pass as program arguments the server you want to work with, for example `server local` will start the [standalone local](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies). You can also use `server standalone` and use the provided `docker-compose-ci.yml` Docker compose file to start a standalone server with a real database as a backend that would need to be configured properly.
- Configure the following environment variables:
- `MICRONAUT_ENVIRONMENTS`: can be set to any string and will load a custom configuration file in `cli/src/main/resources/application-{env}.yml`.
- `KESTRA_PLUGINS_PATH`: is the path where you will save plugins as Jar and will be load on startup.
- See the screenshot below for an example:
- If you encounter **JavaScript memory heap out** error during startup, configure `NODE_OPTIONS` environment variable with some large value.
- Example `NODE_OPTIONS: --max-old-space-size=4096` or `NODE_OPTIONS: --max-old-space-size=8192`
- The server starts by default on port 8080 and is reachable on `http://localhost:8080`
- You may need to enable java annotation processors since we are using it a lot.
- The main class is `io.kestra.cli.App` from module `kestra.cli.main`
- Pass as program arguments the server you want to develop, for example `server local` will start the [standalone local](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies)
-  Intellij Idea configuration can be found in screenshot below.
- `MICRONAUT_ENVIRONMENTS`: can be set any string and will load a custom configuration file in `cli/src/main/resources/application-{env}.yml`
- `KESTRA_PLUGINS_PATH`: is the path where you will save plugins as Jar and will be load on the startup.
- You can also use the gradle task `./gradlew runLocal` that will run a standalone server with `MICRONAUT_ENVIRONMENTS=override` and plugins path `local/plugins`
- The server start by default on port 8080 and is reachable on `http://localhost:8080`

If you want to launch all tests, you need Python and some packages installed on your machine, on Ubuntu you can install them with:

@@ -76,20 +69,21 @@ python3 -m pip install virtualenv
```

#### Develop on the frontend
#### Develop frontend
The frontend is made with [Vue.js](https://vuejs.org/) and located on the `/ui` folder.

- `npm install`
- `npm install --force` (force is need because of some conflicting package)
- create a files `ui/.env.development.local` with content `VITE_APP_API_URL=http://localhost:8080` (or your actual server url)
- `npm run dev` will start the development server with hot reload.
- The server start by default on port 5173 and is reachable on `http://localhost:5173`
- The server start by default on port 8090 and is reachable on `http://localhost:5173`
- You can run `npm run build` in order to build the front-end that will be delivered from the backend (without running the `npm run dev`) above.

Now, you need to start a backend server, you could:
- start a [local server](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies) without a database using this docker-compose file already configured with CORS enabled:
- start a [local server](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies) without database using this docker-compose file already configured with CORS enabled:
```yaml
services:
  kestra:
    image: kestra/kestra:latest
    image: kestra/kestra:latest-full
    user: "root"
    command: server local
    environment:

@@ -105,7 +99,7 @@ services:
    ports:
      - "8080:8080"
```
- start the [Develop backend](#develop-backend) from your IDE, you need to configure CORS restrictions when using the local development npm server, changing the backend configuration allowing the http://localhost:5173 origin in `cli/src/main/resources/application-override.yml`
- start the [Develop backend](#develop-backend) from your IDE and you need to configure CORS restrictions when using the local development npm server, changing the backend configuration allowing the http://localhost:5173 origin in `cli/src/main/resources/application-override.yml`

```yaml
micronaut:

@@ -139,4 +133,4 @@ A complete documentation for developing plugin can be found [here](https://kestr

### Improving The Documentation
The main documentation is located in a separate [repository](https://github.com/kestra-io/kestra.io).
For tasks documentation, they are located directly in the Java source, using [Swagger annotations](https://github.com/swagger-api/swagger-core/wiki/Swagger-2.X---Annotations) (Example: [for Bash tasks](https://github.com/kestra-io/kestra/blob/develop/core/src/main/java/io/kestra/core/tasks/scripts/AbstractBash.java))
For tasks documentation, they are located directly on Java source using [Swagger annotations](https://github.com/swagger-api/swagger-core/wiki/Swagger-2.X---Annotations) (Example: [for Bash tasks](https://github.com/kestra-io/kestra/blob/develop/core/src/main/java/io/kestra/core/tasks/scripts/AbstractBash.java))

54
.github/ISSUE_TEMPLATE/blueprint.yml
vendored
Normal file

@@ -0,0 +1,54 @@
name: Blueprint
description: Add a new blueprint

body:
  - type: markdown
    attributes:
      value: |
        Please fill out all the fields listed below. This will help us review and add your blueprint faster.

  - type: textarea
    attributes:
      label: Blueprint title
      description: A title briefly describing what the blueprint does, ideally in a verb phrase + noun format.
      placeholder: E.g. "Upload a file to service X, then run Y and Z"
    validations:
      required: true

  - type: textarea
    attributes:
      label: Source code
      description: Flow code that will appear on the Blueprint page.
      placeholder: |
        ```yaml
        id: yourFlowId
        namespace: blueprint
        tasks:
          - id: taskName
            type: task_type
        ```
    validations:
      required: true

  - type: textarea
    attributes:
      label: About this blueprint
      description: "A concise markdown documentation about the blueprint's configuration and usage."
      placeholder: |
        E.g. "This flow downloads a file and uploads it to an S3 bucket. This flow assumes AWS credentials stored as environment variables `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`."
    validations:
      required: false

  - type: textarea
    attributes:
      label: Tags (optional)
      description: Blueprint categories such as Ingest, Transform, Analyze, Python, Docker, AWS, GCP, Azure, etc.
      placeholder: |
        - Ingest
        - Transform
        - AWS
    validations:
      required: false

labels:
  - blueprint

12
.github/ISSUE_TEMPLATE/bug.yml
vendored

@@ -4,7 +4,9 @@ body:
  - type: markdown
    attributes:
      value: |
        Thanks for reporting an issue! Please provide a [Minimal Reproducible Example](https://stackoverflow.com/help/minimal-reproducible-example) and share any additional information that may help reproduce, troubleshoot, and hopefully fix the issue, including screenshots, error traceback, and your Kestra server logs. For quick questions, you can contact us directly on [Slack](https://kestra.io/slack).
        Thanks for reporting an issue! Please provide a [Minima Reproducible Example](https://stackoverflow.com/help/minimal-reproducible-example)
        and share any additional information that may help reproduce, troubleshoot, and hopefully fix the issue, including screenshots, error traceback, and your Kestra server logs.
        NOTE: If your issue is more of a question, please ping us directly on [Slack](https://kestra.io/slack).
  - type: textarea
    attributes:
      label: Describe the issue

@@ -17,10 +19,10 @@ body:
      label: Environment
      description: Environment information where the problem occurs.
      value: |
        - Kestra Version: develop
        - Kestra Version:
        - Operating System (OS/Docker/Kubernetes):
        - Java Version (if you don't run kestra in Docker):
    validations:
      required: false
labels:
  - bug
  - area/backend
  - area/frontend
  - bug

3
.github/ISSUE_TEMPLATE/config.yml
vendored

@@ -1,4 +1,7 @@
contact_links:
  - name: GitHub Discussions
    url: https://github.com/kestra-io/kestra/discussions
    about: Ask questions about Kestra on Github
  - name: Chat
    url: https://kestra.io/slack
    about: Chat with us on Slack.

8
.github/ISSUE_TEMPLATE/feature.yml
vendored

@@ -1,13 +1,15 @@
name: Feature request
description: Create a new feature request
body:
  - type: markdown
    attributes:
      value: |
        Please describe the feature you want for Kestra to implement, before that check if there is already an existing issue to add it.
  - type: textarea
    attributes:
      label: Feature description
      placeholder: Tell us more about your feature request
      placeholder: Tell us what feature you would like for Kestra to have and what problem is it going to solve
    validations:
      required: true
labels:
  - enhancement
  - area/backend
  - area/frontend

8
.github/ISSUE_TEMPLATE/other.yml
vendored
Normal file

@@ -0,0 +1,8 @@
name: Other
description: Something different
body:
  - type: textarea
    attributes:
      label: Issue description
    validations:
      required: true

29
.github/actions/plugins-list/action.yml
vendored

@@ -1,29 +0,0 @@
name: 'Load Kestra Plugin List'
description: 'Composite action to load list of plugins'
inputs:
  plugin-version:
    description: "Kestra version"
    default: 'LATEST'
    required: true
  plugin-file:
    description: "File of the plugins"
    default: './.plugins'
    required: true
outputs:
  plugins:
    description: "List of all Kestra plugins"
    value: ${{ steps.plugins.outputs.plugins }}
  repositories:
    description: "List of all Kestra repositories of plugins"
    value: ${{ steps.plugins.outputs.repositories }}
runs:
  using: composite
  steps:
    - name: Get Plugins List
      id: plugins
      shell: bash
      run: |
        PLUGINS=$([ -f ${{ inputs.plugin-file }} ] && cat ${{ inputs.plugin-file }} | grep "io\\.kestra\\." | sed -e '/#/s/^.//' | sed -e "s/LATEST/${{ inputs.plugin-version }}/g" | cut -d':' -f2- | xargs || echo '');
        REPOSITORIES=$([ -f ${{ inputs.plugin-file }} ] && cat ${{ inputs.plugin-file }} | grep "io\\.kestra\\." | sed -e '/#/s/^.//' | cut -d':' -f1 | uniq | sort | xargs || echo '')
        echo "plugins=$PLUGINS" >> $GITHUB_OUTPUT
        echo "repositories=$REPOSITORIES" >> $GITHUB_OUTPUT
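The `Get Plugins List` step in the removed plugins-list action builds both of its outputs with a single grep/sed/cut/xargs pipeline. The Python sketch below restates that pipeline one step at a time so its behavior is easier to follow. Several parts are assumptions:

- The function names and the sample entries in the checks are hypothetical.
- The `repository:group:artifact:version` entry shape is inferred from the `cut -d':'` calls.
- The whitespace handling only approximates bare `xargs`.

```python
"""Sketch of the PLUGINS / REPOSITORIES pipeline from the plugins-list action."""
import re
from pathlib import Path

KESTRA_LINE = re.compile(r"io\.kestra\.")


def read_plugin_file(path: str) -> str:
    # `[ -f file ] && ... || echo ''`: a missing file yields empty output.
    p = Path(path)
    return p.read_text() if p.is_file() else ""


def _selected_lines(content: str) -> list[str]:
    selected = []
    for line in content.splitlines():
        if not KESTRA_LINE.search(line):  # grep "io\.kestra\."
            continue
        if "#" in line:  # sed -e '/#/s/^.//' drops the first character of such lines
            line = line[1:]
        selected.append(line)
    return selected


def _xargs_join(items: list[str]) -> str:
    # Approximates bare `xargs`: split the input on whitespace, echo it on one line.
    return " ".join(" ".join(items).split())


def plugins(content: str, version: str = "LATEST") -> str:
    result = []
    for line in _selected_lines(content):
        line = line.replace("LATEST", version)  # sed "s/LATEST/<version>/g"
        # cut -d':' -f2- drops the first field; lines without ':' pass through whole.
        result.append(line.split(":", 1)[1] if ":" in line else line)
    return _xargs_join(result)


def repositories(content: str) -> str:
    # cut -d':' -f1 keeps the first field (the whole line if there is no ':').
    names = [line.split(":", 1)[0] for line in _selected_lines(content)]
    # uniq runs before sort, so only *adjacent* duplicates are removed.
    deduped = [n for i, n in enumerate(names) if i == 0 or n != names[i - 1]]
    return _xargs_join(sorted(deduped))
```

Two behaviors stand out once the pipeline is written out this way. A `#`-prefixed entry is still selected, because the `sed` step strips the leading character instead of filtering the line out. And since `uniq` runs before `sort`, a repository listed on non-adjacent lines appears more than once in `REPOSITORIES`.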

20
.github/actions/setup-vars/action.yml
vendored

@@ -1,20 +0,0 @@
name: 'Setup vars'
description: 'Composite action to setup common vars'
outputs:
  tag:
    description: "Git tag"
    value: ${{ steps.vars.outputs.tag }}
  commit:
    description: "Git commit"
    value: ${{ steps.vars.outputs.commit }}
runs:
  using: composite
  steps:
    # Setup vars
    - name: Set variables
      id: vars
      shell: bash
      run: |
        TAG=${GITHUB_REF#refs/*/}
        echo "tag=${TAG}" >> $GITHUB_OUTPUT
        echo "commit=$(git rev-parse --short "$GITHUB_SHA")" >> $GITHUB_OUTPUT
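The `setup-vars` action derives its `tag` output with the bash parameter expansion `${GITHUB_REF#refs/*/}`, which removes the shortest prefix matching the pattern `refs/*/`. The Python sketch below makes that rule explicit for different ref kinds. `tag_from_ref` is a hypothetical name. The `commit` output is not modelled, because `git rev-parse --short` needs an actual repository to choose an unambiguous abbreviation.

```python
import re

# `${GITHUB_REF#refs/*/}` strips the shortest prefix matching the shell pattern
# `refs/*/`. The shortest match ends at the first "/" after "refs/", which is
# exactly what the regex `^refs/[^/]*/` matches.
_REF_PREFIX = re.compile(r"^refs/[^/]*/")


def tag_from_ref(github_ref: str) -> str:
    """Return what the setup-vars action would write to its `tag` output."""
    return _REF_PREFIX.sub("", github_ref, count=1)


if __name__ == "__main__":
    for ref in ("refs/tags/v0.16.2", "refs/heads/feature/my-branch", "refs/pull/123/merge"):
        print(ref, "->", tag_from_ref(ref))
```

Despite its name, the `tag` output is only a tag on tag pushes. On branch pushes it holds the branch name (slashes included), and on pull-request events it holds `<number>/merge`.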

31
.github/dependabot.yml
vendored

@@ -1,50 +1,33 @@
# See GitHub's docs for more information on this file:
# https://docs.github.com/en/free-pro-team@latest/github/administering-a-repository/configuration-options-for-dependency-updates

version: 2
updates:
  # Maintain dependencies for GitHub Actions
  - package-ecosystem: "github-actions"
    directory: "/"
    schedule:
      # Check for updates to GitHub Actions every week
      interval: "weekly"
      day: "wednesday"
      time: "08:00"
      timezone: "Europe/Paris"
    open-pull-requests-limit: 50
    labels:
      - "dependency-upgrade"
    open-pull-requests-limit: 50

  # Maintain dependencies for Gradle modules
  - package-ecosystem: "gradle"
    directory: "/"
    schedule:
      # Check for updates to Gradle modules every week
      interval: "weekly"
      day: "wednesday"
      time: "08:00"
      timezone: "Europe/Paris"
    open-pull-requests-limit: 50
    labels:
      - "dependency-upgrade"
    open-pull-requests-limit: 50

  # Maintain dependencies for NPM modules
  # Maintain dependencies for Npm modules
  - package-ecosystem: "npm"
    directory: "/ui"
    schedule:
      # Check for updates to Npm modules every week
      interval: "weekly"
      day: "wednesday"
      time: "08:00"
      timezone: "Europe/Paris"
    open-pull-requests-limit: 50
    labels:
      - "dependency-upgrade"
    ignore:
      # Ignore updates of version 1.x, as we're using the beta of 2.x (still in beta)
      - dependency-name: "vue-virtual-scroller"
        versions:
          - "1.x"

      # Ignore updates to monaco-yaml, version is pinned to 5.3.1 due to patch-package script additions
      - dependency-name: "monaco-yaml"
        versions:
          - ">=5.3.2"
    open-pull-requests-limit: 50

BIN
.github/node_option_env_var.png
vendored

Binary file not shown.
Size before: 130 KiB

5
.github/pull_request_template.md
vendored

@@ -1,13 +1,12 @@
<!-- Thanks for submitting a Pull Request to Kestra. To help us review your contribution, please follow the guidelines below:
<!-- Thanks for submitting a Pull Request to kestra. To help us review your contribution, please follow the guidelines below:

- Make sure that your commits follow the [conventional commits](https://www.conventionalcommits.org/en/v1.0.0/) specification e.g. `feat(ui): add a new navigation menu item` or `fix(core): fix a bug in the core model` or `docs: update the README.md`. This will help us automatically generate the changelog.
- The title should briefly summarize the proposed changes.
- Provide a short overview of the change and the value it adds.
- Share a flow example to help the reviewer understand and QA the change.
- Use "closes" to automatically close an issue. For example, `closes #1234` will close issue #1234. -->
- Use "close" to automatically close an issue. For example, `close #1234` will close issue #1234. -->

### What changes are being made and why?

<!-- Please include a brief summary of the changes included in this PR e.g. closes #1234. -->

---

BIN
.github/run-app.png
vendored

Binary file not shown.
Size before: 210 KiB

67

.github/workflows/auto-translate-ui-keys.yml

@@ -1,67 +0,0 @@
name: Auto-Translate UI keys and create PR

on:
  schedule:
    - cron: "0 9-21/3 * * *" # Every 3 hours from 9 AM to 9 PM
  workflow_dispatch:
    inputs:
      retranslate_modified_keys:
        description: "Whether to re-translate modified keys even if they already have translations."
        type: choice
        options:
          - "false"
          - "true"
        default: "false"
        required: false

jobs:
  translations:
    name: Translations
    runs-on: ubuntu-latest
    timeout-minutes: 10
    steps:
      - uses: actions/checkout@v5
        name: Checkout
        with:
          fetch-depth: 0

      - name: Set up Python
        uses: actions/setup-python@v6
        with:
          python-version: "3.x"

      - name: Install Python dependencies
        run: pip install gitpython openai

      - name: Generate translations
        run: python ui/src/translations/generate_translations.py ${{ github.event.inputs.retranslate_modified_keys }}
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

      - name: Set up Node
        uses: actions/setup-node@v5
        with:
          node-version: "20.x"

      - name: Set up Git
        run: |
          git config --global user.name "GitHub Action"
          git config --global user.email "actions@github.com"

      - name: Commit and create PR
        env:
          GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          BRANCH_NAME="chore/update-translations-$(date +%s)"
          git checkout -b $BRANCH_NAME
          git add ui/src/translations/*.json
          if git diff --cached --quiet; then
            echo "No changes to commit. Exiting with success."
            exit 0
          fi
          git commit -m "chore(core): localize to languages other than english" -m "Extended localization support by adding translations for multiple languages using English as the base. This enhances accessibility and usability for non-English-speaking users while keeping English as the source reference."
          git push -u origin $BRANCH_NAME || (git push origin --delete $BRANCH_NAME && git push -u origin $BRANCH_NAME)
          gh pr create --title "Translations from en.json" --body $'This PR was created automatically by a GitHub Action.\n\nSomeone from the @kestra-io/frontend team needs to review and merge.' --base ${{ github.ref_name }} --head $BRANCH_NAME

      - name: Check keys matching
        run: node ui/src/translations/check.js
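The deleted workflow above is scheduled with the cron expression `0 9-21/3 * * *`. Its comment reads "every 3 hours from 9 AM to 9 PM". GitHub Actions evaluates `schedule` triggers in UTC, so those hours are UTC hours.

A minimal Python sketch can check what a stepped range such as `9-21/3` expands to. `expand_cron_field` is a hypothetical helper and is not part of Kestra or GitHub Actions. It assumes a simplified cron dialect: `*`, single numbers, `a-b` ranges, `/step` after `*` or a range, and comma lists. Month and weekday names are not handled.

```python
from __future__ import annotations


def expand_cron_field(field: str, lo: int, hi: int) -> list[int]:
    """Return the sorted values matched by one cron field, e.g. "9-21/3".

    Simplified dialect (an assumption): "*", single numbers, "a-b" ranges,
    "/step" after "*" or a range, and comma-separated lists. No names.
    """
    values: set[int] = set()
    for part in field.split(","):
        base, _, step_str = part.partition("/")
        step = int(step_str) if step_str else 1
        if step < 1:
            raise ValueError(f"invalid step in {part!r}")
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            first, last = base.split("-", 1)
            start, end = int(first), int(last)
        else:
            if step_str:
                # Cron dialects disagree on "N/step"; reject it rather than guess.
                raise ValueError(f"step without a range in {part!r}")
            start = end = int(base)
        if not lo <= start <= end <= hi:
            raise ValueError(f"{part!r} is outside {lo}-{hi}")
        values.update(range(start, end + 1, step))
    return sorted(values)


# Schedule taken from auto-translate-ui-keys.yml (evaluated in UTC).
minute, hour, *_ = "0 9-21/3 * * *".split()
print(expand_cron_field(minute, 0, 59), expand_cron_field(hour, 0, 23))
# [0] [9, 12, 15, 18, 21]
```

Expanding the minute and hour fields this way suggests the workflow fires at 09:00, 12:00, 15:00, 18:00 and 21:00 UTC. GitHub may start scheduled runs late during periods of high load.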

20

.github/workflows/codeql-analysis.yml

@@ -6,11 +6,11 @@
name: "CodeQL"

on:
push:
branches: [develop]
schedule:
- cron: '0 5 * * 1'

workflow_dispatch: {}

jobs:
analyze:
name: Analyze

@@ -27,7 +27,7 @@ jobs:

steps:
- name: Checkout repository
uses: actions/checkout@v5
uses: actions/checkout@v4
with:
# We must fetch at least the immediate parents so that if this is
# a pull request then we can checkout the head.

@@ -50,24 +50,14 @@ jobs:

# Set up JDK
- name: Set up JDK
uses: actions/setup-java@v5
if: ${{ matrix.language == 'java' }}
uses: actions/setup-java@v4
with:
distribution: 'temurin'
java-version: 21

- name: Setup gradle
if: ${{ matrix.language == 'java' }}
uses: gradle/actions/setup-gradle@v4

- name: Build with Gradle
if: ${{ matrix.language == 'java' }}
run: ./gradlew testClasses -x :ui:assembleFrontend
java-version: 17

# Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
# If this step fails, then you should remove it and run the build manually (see below)
- name: Autobuild
if: ${{ matrix.language != 'java' }}
uses: github/codeql-action/autobuild@v3

# ℹ️ Command-line programs to run using the OS shell.

86

.github/workflows/e2e.yml

@@ -1,86 +0,0 @@
name: 'E2E tests revival'
description: 'New E2E tests implementation started by Roman. Based on playwright in npm UI project, tests Kestra OSS develop docker image. These tests are written from zero, lets make them unflaky from the start!.'
on:
  schedule:
    - cron: "0 * * * *" # Every hour
  workflow_call:
    inputs:
      noInputYet:
        description: 'not input yet.'
        required: false
        type: string
        default: "no input"
  workflow_dispatch:
    inputs:
      noInputYet:
        description: 'not input yet.'
        required: false
        type: string
        default: "no input"
jobs:
  check:
    timeout-minutes: 15
    runs-on: ubuntu-latest
    env:
      GOOGLE_SERVICE_ACCOUNT: ${{ secrets.GOOGLE_SERVICE_ACCOUNT }}
    steps:
      - name: Login to DockerHub
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ github.token }}

      - name: Checkout kestra
        uses: actions/checkout@v5
        with:
          path: kestra

      # Setup build
      - uses: kestra-io/actions/composite/setup-build@main
        name: Setup - Build
        id: build
        with:
          java-enabled: true
          node-enabled: true
          python-enabled: true

      - name: Install Npm dependencies
        run: |
          cd kestra/ui
          npm i
          npx playwright install --with-deps chromium

      - name: Run E2E Tests
        run: |
          cd kestra
          sh build-and-start-e2e-tests.sh

      - name: Upload Playwright Report as Github artifact
        # 'With this report, you can analyze locally the results of the tests. see https://playwright.dev/docs/ci-intro#html-report'
        uses: actions/upload-artifact@v4
        if: ${{ !cancelled() }}
        with:
          name: playwright-report
          path: kestra/ui/playwright-report/
          retention-days: 7
      # Allure check
      # TODO I don't know what it should do
      # - uses: rlespinasse/github-slug-action@v5
      #   name: Allure - Generate slug variables
      #
      # - name: Allure - Publish report
      #   uses: andrcuns/allure-publish-action@v2.9.0
      #   if: always() && env.GOOGLE_SERVICE_ACCOUNT != ''
      #   continue-on-error: true
      #   env:
      #     GITHUB_AUTH_TOKEN: ${{ secrets.GITHUB_AUTH_TOKEN }}
      #     JAVA_HOME: /usr/lib/jvm/default-jvm/
      #   with:
      #     storageType: gcs
      #     resultsGlob: "**/build/allure-results"
      #     bucket: internal-kestra-host
      #     baseUrl: "https://internal.dev.kestra.io"
      #     prefix: ${{ format('{0}/{1}', github.repository, 'allure/java') }}
      #     copyLatest: true
      #     ignoreMissingResults: true

74

.github/workflows/gradle-release-plugins.yml

@@ -1,74 +0,0 @@
name: Run Gradle Release for Kestra Plugins

on:
  workflow_dispatch:
    inputs:
      releaseVersion:
        description: 'The release version (e.g., 0.21.0)'
        required: true
        type: string
      nextVersion:
        description: 'The next version (e.g., 0.22.0-SNAPSHOT)'
        required: true
        type: string
      dryRun:
        description: 'Use DRY_RUN mode'
        required: false
        default: 'false'
jobs:
  release:
    name: Release plugins
    runs-on: ubuntu-latest
    steps:
      # Checkout
      - uses: actions/checkout@v5
        with:
          fetch-depth: 0

      # Setup build
      - uses: kestra-io/actions/composite/setup-build@main
        id: build
        with:
          java-enabled: true
          node-enabled: true
          python-enabled: true

      # Get Plugins List
      - name: Get Plugins List
        uses: ./.github/actions/plugins-list
        id: plugins-list
        with:
          plugin-version: 'LATEST'

      - name: 'Configure Git'
        run: |
          git config --global user.email "41898282+github-actions[bot]@users.noreply.github.com"
          git config --global user.name "github-actions[bot]"

      # Execute
      - name: Run Gradle Release
        if: ${{ github.event.inputs.dryRun == 'false' }}
        env:
          GITHUB_PAT: ${{ secrets.GH_PERSONAL_TOKEN }}
        run: |
          chmod +x ./dev-tools/release-plugins.sh;

          ./dev-tools/release-plugins.sh \
            --release-version=${{github.event.inputs.releaseVersion}} \
            --next-version=${{github.event.inputs.nextVersion}} \
            --yes \
            ${{ steps.plugins-list.outputs.repositories }}

      - name: Run Gradle Release (DRY_RUN)
        if: ${{ github.event.inputs.dryRun == 'true' }}
        env:
          GITHUB_PAT: ${{ secrets.GH_PERSONAL_TOKEN }}
        run: |
          chmod +x ./dev-tools/release-plugins.sh;

          ./dev-tools/release-plugins.sh \
            --release-version=${{github.event.inputs.releaseVersion}} \
            --next-version=${{github.event.inputs.nextVersion}} \
            --dry-run \
            --yes \
            ${{ steps.plugins-list.outputs.repositories }}

84

.github/workflows/gradle-release.yml

@@ -1,84 +0,0 @@
name: Run Gradle Release
run-name: "Releasing Kestra ${{ github.event.inputs.releaseVersion }} 🚀"
on:
  workflow_dispatch:
    inputs:
      releaseVersion:
        description: 'The release version (e.g., 0.21.0)'
        required: true
        type: string
      nextVersion:
        description: 'The next version (e.g., 0.22.0-SNAPSHOT)'
        required: true
        type: string
env:
  RELEASE_VERSION: "${{ github.event.inputs.releaseVersion }}"
  NEXT_VERSION: "${{ github.event.inputs.nextVersion }}"
jobs:
  release:
    name: Release Kestra
    runs-on: ubuntu-latest
    if: github.ref == 'refs/heads/develop'
    steps:
      # Checks
      - name: Check Inputs
        run: |
          if ! [[ "$RELEASE_VERSION" =~ ^[0-9]+(\.[0-9]+)\.0$ ]]; then
            echo "Invalid release version. Must match regex: ^[0-9]+(\.[0-9]+)\.0$"
            exit 1
          fi

          if ! [[ "$NEXT_VERSION" =~ ^[0-9]+(\.[0-9]+)\.0-SNAPSHOT$ ]]; then
            echo "Invalid next version. Must match regex: ^[0-9]+(\.[0-9]+)\.0-SNAPSHOT$"
            exit 1;
          fi
      # Checkout
      - uses: actions/checkout@v5
        with:
          fetch-depth: 0
          path: kestra

      # Setup build
      - uses: kestra-io/actions/composite/setup-build@main
        id: build
        with:
          java-enabled: true
          node-enabled: true
          python-enabled: true
          caches-enabled: true

      - name: Configure Git
        run: |
          git config --global user.email "41898282+github-actions[bot]@users.noreply.github.com"
          git config --global user.name "github-actions[bot]"

      # Execute
      - name: Run Gradle Release
        env:
          GITHUB_PAT: ${{ secrets.GH_PERSONAL_TOKEN }}
        run: |
          # Extract the major and minor versions
          BASE_VERSION=$(echo "$RELEASE_VERSION" | sed -E 's/^([0-9]+\.[0-9]+)\..*/\1/')
          PUSH_RELEASE_BRANCH="releases/v${BASE_VERSION}.x"

          cd kestra

          # Create and push release branch
          git checkout -b "$PUSH_RELEASE_BRANCH";
          git push -u origin "$PUSH_RELEASE_BRANCH";

          # Run gradle release
          git checkout develop;

          if [[ "$RELEASE_VERSION" == *"-SNAPSHOT" ]]; then
            ./gradlew release -Prelease.useAutomaticVersion=true \
              -Prelease.releaseVersion="${RELEASE_VERSION}" \
              -Prelease.newVersion="${NEXT_VERSION}" \
              -Prelease.pushReleaseVersionBranch="${PUSH_RELEASE_BRANCH}" \
              -Prelease.failOnSnapshotDependencies=false
          else
            ./gradlew release -Prelease.useAutomaticVersion=true \
              -Prelease.releaseVersion="${RELEASE_VERSION}" \
              -Prelease.newVersion="${NEXT_VERSION}" \
              -Prelease.pushReleaseVersionBranch="${PUSH_RELEASE_BRANCH}"
          fi
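The deleted `gradle-release.yml` works in two stages:

- Its "Check Inputs" step accepts only `X.Y.0` release versions and `X.Y.0-SNAPSHOT` next versions.
- The release step then derives the `releases/vX.Y.x` branch name with `sed`.

The Python sketch below reproduces that logic so candidate inputs can be checked before dispatching the workflow. It assumes Python's `re` treats these simple patterns the same way bash's `=~` does. `release_branch` is a hypothetical name, not something defined in the repository.

```python
import re

# Same patterns as the workflow's "Check Inputs" step (bash [[ =~ ]]).
RELEASE_RE = re.compile(r"^[0-9]+(\.[0-9]+)\.0$")
NEXT_RE = re.compile(r"^[0-9]+(\.[0-9]+)\.0-SNAPSHOT$")


def release_branch(release_version: str, next_version: str) -> str:
    """Validate both inputs and return the release branch name.

    Hypothetical helper mirroring the workflow's shell logic.
    """
    # fullmatch also rejects a trailing newline, which a bare "$" would allow
    if not RELEASE_RE.fullmatch(release_version):
        raise ValueError(f"Invalid release version: {release_version!r}")
    if not NEXT_RE.fullmatch(next_version):
        raise ValueError(f"Invalid next version: {next_version!r}")
    # Equivalent of: sed -E 's/^([0-9]+\.[0-9]+)\..*/\1/' (keep MAJOR.MINOR)
    base_version = re.sub(r"^([0-9]+\.[0-9]+)\..*", r"\1", release_version)
    return f"releases/v{base_version}.x"


print(release_branch("0.21.0", "0.22.0-SNAPSHOT"))  # releases/v0.21.x
```

Any value that passes the first check ends in `.0` with no suffix. The `*"-SNAPSHOT"` branch of the later `if` therefore cannot be taken for a validated `RELEASE_VERSION`.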

458

.github/workflows/main.yml

@@ -1,76 +1,432 @@
name: Main Workflow
name: Main

on:
workflow_dispatch:
inputs:
skip-test:
description: 'Skip test'
type: choice
required: true
default: 'false'
options:
- "true"
- "false"
plugin-version:
description: "plugins version"
required: false
type: string
push:
branches:
- master
- main
- releases/*
- release
- develop
tags:
- v*

pull_request:
branches:
- master
- release
- develop
repository_dispatch:
types: [ rebuild ]
workflow_dispatch:
inputs:
skip-test:
description: 'Skip test'
required: false
type: string
default: "false"

concurrency:
group: ${{ github.workflow }}-${{ github.ref }}-main
group: ${{ github.workflow }}-${{ github.ref }}
cancel-in-progress: true

jobs:
tests:
name: Execute tests
uses: ./.github/workflows/workflow-test.yml
if: ${{ github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '' }}
with:
report-status: false
check:
env:
SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}
name: Check & Publish
runs-on: ubuntu-latest
timeout-minutes: 60
steps:
- uses: actions/checkout@v4
with:
fetch-depth: 0

release:
name: Release
needs: [tests]
if: "!failure() && !cancelled() && !startsWith(github.ref, 'refs/heads/releases')"
uses: ./.github/workflows/workflow-release.yml
with:
plugin-version: ${{ inputs.plugin-version != '' && inputs.plugin-version || (github.ref == 'refs/heads/develop' && 'LATEST-SNAPSHOT' || 'LATEST') }}
secrets:
DOCKERHUB_USERNAME: ${{ secrets.DOCKERHUB_USERNAME }}
DOCKERHUB_PASSWORD: ${{ secrets.DOCKERHUB_PASSWORD }}
SONATYPE_USER: ${{ secrets.SONATYPE_USER }}
SONATYPE_PASSWORD: ${{ secrets.SONATYPE_PASSWORD }}
SONATYPE_GPG_KEYID: ${{ secrets.SONATYPE_GPG_KEYID }}
SONATYPE_GPG_PASSWORD: ${{ secrets.SONATYPE_GPG_PASSWORD }}
SONATYPE_GPG_FILE: ${{ secrets.SONATYPE_GPG_FILE }}
GH_PERSONAL_TOKEN: ${{ secrets.GH_PERSONAL_TOKEN }}
SLACK_RELEASES_WEBHOOK_URL: ${{ secrets.SLACK_RELEASES_WEBHOOK_URL }}
- uses: actions/setup-python@v5
with:
python-version: '3.x'
architecture: 'x64'
- uses: actions/setup-node@v4
with:
node-version: '18'
check-latest: true

# Services
- name: Build the docker-compose stack
run: docker compose -f docker-compose-ci.yml up -d
if: ${{ github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '' }}

# Caches
- name: Gradle cache
uses: actions/cache@v4
with:
path: |
~/.gradle/caches
~/.gradle/wrapper
key: ${{ runner.os }}-gradle-${{ hashFiles('**/*.gradle*', '**/gradle*.properties') }}
restore-keys: |
${{ runner.os }}-gradle-

- name: Npm cache
uses: actions/cache@v4
with:
path: ~/.npm
key: ${{ runner.os }}-npm-${{ hashFiles('**/package-lock.json') }}
restore-keys: |
${{ runner.os }}-npm-

- name: Node cache
uses: actions/cache@v4
with:
path: node
key: ${{ runner.os }}-node-${{ hashFiles('ui/*.gradle') }}
restore-keys: |
${{ runner.os }}-node-

- name: SonarCloud cache
uses: actions/cache@v4
with:
path: ~/.sonar/cache
key: ${{ runner.os }}-sonar
restore-keys: ${{ runner.os }}-sonar

# JDK
- name: Set up JDK
uses: actions/setup-java@v4
with:
distribution: 'temurin'
java-version: 17

- name: Validate Gradle wrapper
uses: gradle/wrapper-validation-action@v2

# Gradle check
- name: Build with Gradle
if: ${{ github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '' }}
env:
GOOGLE_SERVICE_ACCOUNT: ${{ secrets.GOOGLE_SERVICE_ACCOUNT }}
run: |
python3 -m pip install virtualenv
echo $GOOGLE_SERVICE_ACCOUNT | base64 -d > ~/.gcp-service-account.json
export GOOGLE_APPLICATION_CREDENTIALS=$HOME/.gcp-service-account.json
./gradlew check jacoco javadoc --no-daemon --priority=normal

# report test
- name: Test Report
uses: mikepenz/action-junit-report@v4
if: success() || failure()
with:
report_paths: '**/build/test-results/**/TEST-*.xml'

- name: Analyze with Sonar
if: ${{ env.SONAR_TOKEN != 0 }}
env:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}
run: ./gradlew sonar --info

# Codecov
- uses: codecov/codecov-action@v4
if: ${{ github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '' }}
with:
token: ${{ secrets.CODECOV_TOKEN }}

# Shadow Jar
- name: Build jars
run: ./gradlew executableJar --no-daemon --priority=normal

# Upload artifacts
- name: Upload jar
uses: actions/upload-artifact@v4
with:
name: jar
path: build/libs/

- name: Upload Executable
uses: actions/upload-artifact@v4
with:
name: exe
path: build/executable/

# GitHub Release
- name: Create GitHub release
uses: "marvinpinto/action-automatic-releases@latest"
if: startsWith(github.ref, 'refs/tags/v')
continue-on-error: true
with:
repo_token: "${{ secrets.GITHUB_TOKEN }}"
prerelease: false
files: |
build/executable/*

- name: Flow to add BC
if: startsWith(github.ref, 'refs/tags/v')
continue-on-error: true
run: |
curl --location "http://18.153.185.126:8080/api/v1/executions/webhook/product/release_notes/${{secrets.KESTRA_WEBHOOK_KEY}}" \
--header 'Content-Type: application/json'

docker:
name: Publish docker
runs-on: ubuntu-latest
needs: check
if: github.ref == 'refs/heads/master' || github.ref == 'refs/heads/develop' || github.ref == 'refs/heads/release' || startsWith(github.ref, 'refs/tags/v')
strategy:
matrix:
image:
- name: ""
plugins: ""
packages: ""
python-libs: ""
- name: "-full"
plugins: >-
io.kestra.plugin:plugin-airbyte:LATEST
io.kestra.plugin:plugin-amqp:LATEST
io.kestra.plugin:plugin-ansible:LATEST
io.kestra.plugin:plugin-aws:LATEST
io.kestra.plugin:plugin-azure:LATEST
io.kestra.plugin:plugin-cassandra:LATEST
io.kestra.plugin:plugin-cloudquery:LATEST
io.kestra.plugin:plugin-compress:LATEST
io.kestra.plugin:plugin-couchbase:LATEST
io.kestra.plugin:plugin-crypto:LATEST
io.kestra.plugin:plugin-databricks:LATEST
io.kestra.plugin:plugin-dataform:LATEST
io.kestra.plugin:plugin-dbt:LATEST
io.kestra.plugin:plugin-debezium-mysql:LATEST
io.kestra.plugin:plugin-debezium-postgres:LATEST
io.kestra.plugin:plugin-debezium-sqlserver:LATEST
io.kestra.plugin:plugin-docker:LATEST
io.kestra.plugin:plugin-elasticsearch:LATEST
io.kestra.plugin:plugin-fivetran:LATEST
io.kestra.plugin:plugin-fs:LATEST
io.kestra.plugin:plugin-gcp:LATEST
io.kestra.plugin:plugin-git:LATEST
io.kestra.plugin:plugin-googleworkspace:LATEST
io.kestra.plugin:plugin-hightouch:LATEST
io.kestra.plugin:plugin-jdbc-as400:LATEST
io.kestra.plugin:plugin-jdbc-clickhouse:LATEST
io.kestra.plugin:plugin-jdbc-db2:LATEST
io.kestra.plugin:plugin-jdbc-duckdb:LATEST
io.kestra.plugin:plugin-jdbc-druid:LATEST
io.kestra.plugin:plugin-jdbc-mysql:LATEST
io.kestra.plugin:plugin-jdbc-oracle:LATEST
io.kestra.plugin:plugin-jdbc-pinot:LATEST
io.kestra.plugin:plugin-jdbc-postgres:LATEST
io.kestra.plugin:plugin-jdbc-redshift:LATEST
io.kestra.plugin:plugin-jdbc-rockset:LATEST
io.kestra.plugin:plugin-jdbc-snowflake:LATEST
io.kestra.plugin:plugin-jdbc-sqlserver:LATEST
io.kestra.plugin:plugin-jdbc-trino:LATEST
io.kestra.plugin:plugin-jdbc-vectorwise:LATEST
io.kestra.plugin:plugin-jdbc-vertica:LATEST
io.kestra.plugin:plugin-jdbc-dremio:LATEST
io.kestra.plugin:plugin-jdbc-arrow-flight:LATEST
io.kestra.plugin:plugin-jdbc-sqlite:LATEST
io.kestra.plugin:plugin-kafka:LATEST
io.kestra.plugin:plugin-kubernetes:LATEST
io.kestra.plugin:plugin-malloy:LATEST
io.kestra.plugin:plugin-modal:LATEST
io.kestra.plugin:plugin-mongodb:LATEST
io.kestra.plugin:plugin-mqtt:LATEST
io.kestra.plugin:plugin-nats:LATEST
io.kestra.plugin:plugin-neo4j:LATEST
io.kestra.plugin:plugin-notifications:LATEST
io.kestra.plugin:plugin-openai:LATEST
io.kestra.plugin:plugin-powerbi:LATEST
io.kestra.plugin:plugin-pulsar:LATEST
io.kestra.plugin:plugin-redis:LATEST
io.kestra.plugin:plugin-script-groovy:LATEST
io.kestra.plugin:plugin-script-julia:LATEST
io.kestra.plugin:plugin-script-jython:LATEST
io.kestra.plugin:plugin-script-nashorn:LATEST
io.kestra.plugin:plugin-script-node:LATEST
io.kestra.plugin:plugin-script-powershell:LATEST
io.kestra.plugin:plugin-script-python:LATEST
io.kestra.plugin:plugin-script-r:LATEST
io.kestra.plugin:plugin-script-ruby:LATEST
io.kestra.plugin:plugin-script-shell:LATEST
io.kestra.plugin:plugin-serdes:LATEST
io.kestra.plugin:plugin-servicenow:LATEST
io.kestra.plugin:plugin-singer:LATEST
io.kestra.plugin:plugin-soda:LATEST
io.kestra.plugin:plugin-solace:LATEST
io.kestra.plugin:plugin-spark:LATEST
io.kestra.plugin:plugin-sqlmesh:LATEST
io.kestra.plugin:plugin-surrealdb:LATEST
io.kestra.plugin:plugin-terraform:LATEST
io.kestra.plugin:plugin-tika:LATEST
io.kestra.plugin:plugin-weaviate:LATEST
io.kestra.storage:storage-azure:LATEST
io.kestra.storage:storage-gcs:LATEST
io.kestra.storage:storage-minio:LATEST
io.kestra.storage:storage-s3:LATEST
packages: python3 python3-venv python-is-python3 python3-pip nodejs npm curl zip unzip
python-libs: kestra
steps:
- uses: actions/checkout@v4

# Artifact
- name: Download executable
uses: actions/download-artifact@v4
with:
name: exe
path: build/executable

- name: Copy exe to image
run: |
cp build/executable/* docker/app/kestra && chmod +x docker/app/kestra

# Vars