Mirror of https://github.com/kestra-io/kestra.git (synced 2025-12-25 11:12:12 -05:00)

Compare commits: run-develo ... v0.19.25

160 Commits

| SHA1 |
|---|
| 1bfdae7506 |
| 6a232bf7c6 |
| f0acc68a48 |
| 18391f535f |
| c6c2c974ee |
| c008920845 |
| ae2a4db153 |
| fde37e4b30 |
| 705e834c8a |
| 8f123b1da5 |
| 259a9036b9 |
| 49fd7e411f |
| 3b5527b872 |
| c14d9573d3 |
| 2dff7be851 |
| 9ceb633e97 |
| 434f0caf9a |
| d00a8f549f |
| c8a829081c |
| 86117b63b6 |
| f3bb05a6d5 |
| 317cc02d77 |
| b0ae3b643c |
| 338cc8c38c |
| 9231c28bb5 |
| 5a722cdb6c |
| da47b05d61 |
| 97d1267852 |
| afe41c1600 |
| a12fa5b82b |
| b3376d2183 |
| dd6d8a7c22 |
| cb3e73c651 |
| f95f47ae0c |
| e042a0a4b3 |
| f92bfe9e98 |
| 7ef33e35f1 |
| bc27733149 |
| 355543d4f7 |
| 12474e118a |
| 7c31e0306c |
| 3aace06d31 |
| dcd697bcac |
| 5fc3f769da |
| 0d52d9a6a9 |
| 85f11feace |
| 523294ce8c |
| b15bc9bacd |
| 74f28be32e |
| 2a714791a1 |
| f9a547ed63 |
| a817970c1c |
| c9e8b7ea06 |
| 8f4a9e2dc8 |
| 911ae32113 |
| 928214a22b |
| d5eafa69aa |
| ae48352300 |
| a59f758d28 |
| befbefbdd9 |
| 57c7389f9e |
| 427da64744 |
| 430dc8ecee |
| 2d9c98b921 |
| a49b406f03 |
| 2a578fe651 |
| 277bf77fb4 |
| e6ec59443a |
| b68b281ac0 |
| 37bf6ea8f3 |
| 71a296a814 |
| 6d8bc07f5b |
| 07974aa145 |
| c7288bd325 |
| 96f553c1ba |
| 8389102706 |
| 16a0096c45 |
| 6327dcd51b |
| e91beaa15f |
| 833bdb38ee |
| 0d64c74a67 |
| 4740fa3628 |
| b29965c239 |
| 05d1eeadef |
| acd2ce9041 |
| a3829c3d7e |
| 17c18f94dd |
| 14daa96295 |
| aa9aa80f0a |
| 705d17340d |
| cf70c99e59 |
| c5e0cddca5 |
| 4d14464191 |
| ed12797b46 |
| ec85a748ce |
| 3e8a63888a |
| 8d0bcc1da3 |
| 0b53f1cf25 |
| 3621aad6a1 |
| dbb1cc5007 |
| 0d6e655b22 |
| 7a1a180fdb |
| ce2daf52ff |
| f086da3a2a |
| 1886a443c7 |
| 5a4e2b791d |
| a595cecb3d |
| 472b699ca7 |
| f55f52b43a |
| c796308839 |
| 37a880164d |
| 5f1408c560 |
| 4186900fdb |
| 4338437a6f |
| 68ee5e4df0 |
| 2def5cf7f8 |
| d184858abf |
| dfa5875fa1 |
| ac4f7f261d |
| ae55685d2e |
| dd34317e4f |
| f95e3073dd |
| 9f20988997 |
| 5da3ab4f71 |
| 243eaab826 |
| 6d362d688d |
| 39a01e0e7d |
| a44b2ef7cb |
| 6bcad13444 |
| 02acf01ea5 |
| 55193361b8 |
| 8d509a3ba5 |
| 500680bcf7 |
| 412c27cb12 |
| 8d7d9a356f |
| d2ab2e97b4 |
| 6a0f360fc6 |
| 0484fd389a |
| e92aac3b39 |
| 39b8ac8804 |
| f928ed5876 |
| 54856af0a8 |
| 8bd79e82ab |
| 104a491b92 |
| 5f46a0dd16 |
| 24c3703418 |
| e5af245855 |
| d58e8f98a2 |
| ce2f1bfdb3 |
| b619f88eff |
| 1f1775752b |
| b2475e53a2 |
| 7e8956a0b7 |
| 6537ee984b |
| 573aa48237 |
| 66ddeaa219 |
| 02c5e8a1a2 |
| 733c7897b9 |
| c051287688 |
| 1af8de6bce |

19

.github/CONTRIBUTING.md

vendored

19

.github/CONTRIBUTING.md

vendored

@@ -52,14 +52,17 @@ The backend is made with [Micronaut](https://micronaut.io).

|

||||

Open the cloned repository in your favorite IDE. In most of decent IDEs, Gradle build will be detected and all dependencies will be downloaded.

|

||||

You can also build it from a terminal using `./gradlew build`, the Gradle wrapper will download the right Gradle version to use.

|

||||

|

||||

- You may need to enable java annotation processors since we are using it a lot.

|

||||

- The main class is `io.kestra.cli.App` from module `kestra.cli.main`

|

||||

- Pass as program arguments the server you want to develop, for example `server local` will start the [standalone local](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies)

|

||||

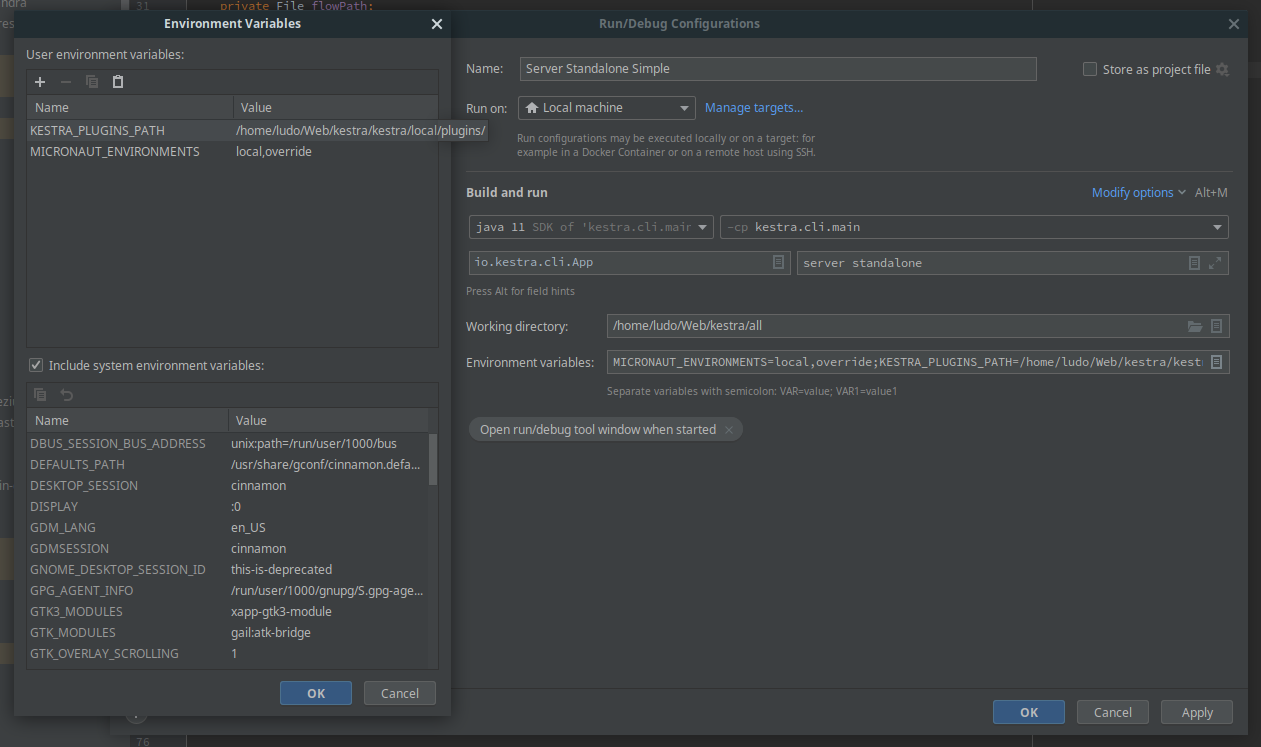

-  Intellij Idea configuration can be found in screenshot below.

|

||||

- `MICRONAUT_ENVIRONMENTS`: can be set any string and will load a custom configuration file in `cli/src/main/resources/application-{env}.yml`

|

||||

- `KESTRA_PLUGINS_PATH`: is the path where you will save plugins as Jar and will be load on the startup.

|

||||

- You can also use the gradle task `./gradlew runLocal` that will run a standalone server with `MICRONAUT_ENVIRONMENTS=override` and plugins path `local/plugins`

|

||||

- The server start by default on port 8080 and is reachable on `http://localhost:8080`

|

||||

- You may need to enable java annotation processors since we are using them.

|

||||

- On IntelliJ IDEA, click on **Run -> Edit Configurations -> + Add new Configuration** to create a run configuration to start Kestra.

|

||||

- The main class is `io.kestra.cli.App` from module `kestra.cli.main`.

|

||||

- Pass as program arguments the server you want to work with, for example `server local` will start the [standalone local](https://kestra.io/docs/administrator-guide/server-cli#kestra-local-development-server-with-no-dependencies). You can also use `server standalone` and use the provided `docker-compose-ci.yml` Docker compose file to start a standalone server with a real database as a backend that would need to be configured properly.

|

||||

- Configure the following environment variables:

|

||||

- `MICRONAUT_ENVIRONMENTS`: can be set to any string and will load a custom configuration file in `cli/src/main/resources/application-{env}.yml`.

|

||||

- `KESTRA_PLUGINS_PATH`: is the path where you will save plugins as Jar and will be load on startup.

|

||||

- See the screenshot bellow for an example:

|

||||

- If you encounter **JavaScript memory heap out** error during startup, configure `NODE_OPTIONS` environment variable with some large value.

|

||||

- Example `NODE_OPTIONS: --max-old-space-size=4096` or `NODE_OPTIONS: --max-old-space-size=8192`

|

||||

- The server starts by default on port 8080 and is reachable on `http://localhost:8080`

|

||||

|

||||

If you want to launch all tests, you need Python and some packages installed on your machine, on Ubuntu you can install them with:

|

||||

|

||||

|

||||

BIN · .github/node_option_env_var.png · vendored · Normal file

Binary file not shown.

After: Size: 130 KiB

5 · .github/workflows/docker.yml · vendored

@@ -77,6 +77,11 @@ jobs:
- name: Set up QEMU
uses: docker/setup-qemu-action@v3

- name: Docker - Fix Qemu
shell: bash
run: |
docker run --rm --privileged multiarch/qemu-user-static --reset -p yes -c yes

- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v3

122 · .github/workflows/generate_translations.yml · vendored

@@ -1,45 +1,111 @@
name: Generate Translations

on:
pull_request:
types: [opened, synchronize]
paths:
- "ui/src/translations/en.json"

push:
branches:
- develop
paths:
- 'ui/src/translations/en.json'

env:
OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}

jobs:
generate-translations:
name: Generate Translations and Create PR
commit:
name: Commit directly to PR
runs-on: ubuntu-latest
if: ${{ github.event.pull_request.head.repo.fork == false }}
steps:
- name: Checkout code
uses: actions/checkout@v4
with:
fetch-depth: 10 # Ensures that at least 10 commits are fetched for comparison
- name: Checkout code
uses: actions/checkout@v4
with:
fetch-depth: 10
ref: ${{ github.head_ref }}

- name: Set up Python
uses: actions/setup-python@v5
with:
python-version: '3.x'
- name: Set up Python
uses: actions/setup-python@v5
with:
python-version: "3.x"

- name: Install dependencies
run: pip install gitpython openai
- name: Install Python dependencies
run: pip install gitpython openai

- name: Generate translations
run: python ui/src/translations/generate_translations.py
- name: Generate translations
run: python ui/src/translations/generate_translations.py

- name: Commit, push changes, and create PR
env:
GH_TOKEN: ${{ github.token }}
run: |
git config --global user.name "GitHub Action"
git config --global user.email "actions@github.com"
BRANCH_NAME="translations/update-translations-$(date +%s)"
git checkout -b $BRANCH_NAME
git add ui/src/translations/*.json
git commit -m "Auto-generate translations from en.json"
git push --set-upstream origin $BRANCH_NAME
gh pr create --title "Auto-generate translations from en.json" --body "This PR was created automatically by a GitHub Action." --base develop --head $BRANCH_NAME --assignee anna-geller --reviewer anna-geller
- name: Set up Node
uses: actions/setup-node@v4
with:
node-version: "20.x"

- name: Check keys matching
run: node ui/src/translations/check.js

- name: Set up Git
run: |
git config --global user.name "GitHub Action"
git config --global user.email "actions@github.com"

- name: Check for changes and commit
env:
GH_TOKEN: ${{ github.token }}
run: |
git add ui/src/translations/*.json
if git diff --cached --quiet; then
echo "No changes to commit. Exiting with success."
exit 0
fi
git commit -m "chore(translations): auto generate values for languages other than english"
git push origin ${{ github.head_ref }}

pull_request:
name: Open PR for a forked repository
runs-on: ubuntu-latest
if: ${{ github.event.pull_request.head.repo.fork == true }}
steps:
- name: Checkout code
uses: actions/checkout@v4
with:
fetch-depth: 10

- name: Set up Python
uses: actions/setup-python@v5
with:
python-version: "3.x"

- name: Install Python dependencies
run: pip install gitpython openai

- name: Generate translations
run: python ui/src/translations/generate_translations.py

- name: Set up Node
uses: actions/setup-node@v4
with:
node-version: "20.x"

- name: Check keys matching
run: node ui/src/translations/check.js

- name: Set up Git
run: |
git config --global user.name "GitHub Action"
git config --global user.email "actions@github.com"

- name: Create and push a new branch
env:
GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
run: |
BRANCH_NAME="generated-translations-${{ github.event.pull_request.head.repo.name }}"

git checkout -b $BRANCH_NAME
git add ui/src/translations/*.json
if git diff --cached --quiet; then
echo "No changes to commit. Exiting with success."
exit 0
fi
git commit -m "chore(translations): auto generate values for languages other than english"
git push origin $BRANCH_NAME

32 · .github/workflows/main.yml · vendored

@@ -68,6 +68,7 @@ jobs:
# Get Plugins List
- name: Get Plugins List
uses: ./.github/actions/plugins-list
if: "!startsWith(github.ref, 'refs/tags/v')"
id: plugins-list
with:
plugin-version: ${{ env.PLUGIN_VERSION }}

@@ -75,6 +76,7 @@ jobs:
# Set Plugins List
- name: Set Plugin List
id: plugins
if: "!startsWith(github.ref, 'refs/tags/v')"
run: |
PLUGINS="${{ steps.plugins-list.outputs.plugins }}"
TAG=${GITHUB_REF#refs/*/}

@@ -122,6 +124,7 @@ jobs:
# Docker Build
- name: Build & Export Docker Image
uses: docker/build-push-action@v6
if: "!startsWith(github.ref, 'refs/tags/v')"
with:
context: .
push: false

@@ -149,6 +152,7 @@ jobs:

- name: Upload Docker
uses: actions/upload-artifact@v4
if: "!startsWith(github.ref, 'refs/tags/v')"
with:
name: ${{ steps.vars.outputs.artifact }}
path: /tmp/${{ steps.vars.outputs.artifact }}.tar

@@ -156,7 +160,7 @@ jobs:
check-e2e:
name: Check E2E Tests
needs: build-artifacts
if: ${{ github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '' }}
if: ${{ (github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '') && !startsWith(github.ref, 'refs/tags/v') }}
uses: ./.github/workflows/e2e.yml
strategy:
fail-fast: false

@@ -214,13 +218,13 @@ jobs:
export GOOGLE_APPLICATION_CREDENTIALS=$HOME/.gcp-service-account.json
./gradlew check javadoc --parallel

# Sonar
- name: Analyze with Sonar
if: ${{ env.SONAR_TOKEN != 0 && (github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '') }}
env:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}
run: ./gradlew sonar --info
# # Sonar
# - name: Analyze with Sonar
# if: ${{ env.SONAR_TOKEN != 0 && (github.event.inputs.skip-test == 'false' || github.event.inputs.skip-test == '') }}
# env:
# GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
# SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}
# run: ./gradlew sonar --info

# Allure check
- name: Auth to Google Cloud

@@ -276,7 +280,11 @@ jobs:
name: Github Release
runs-on: ubuntu-latest
needs: [ check, check-e2e ]
if: startsWith(github.ref, 'refs/tags/v')
if: |
always() &&
startsWith(github.ref, 'refs/tags/v') &&
needs.check.result == 'success' &&
(needs.check-e2e.result == 'skipped' || needs.check-e2e.result == 'success')
steps:
# Download Exec
- name: Download executable

@@ -368,7 +376,11 @@ jobs:
name: Publish to Maven
runs-on: ubuntu-latest
needs: [check, check-e2e]
if: github.ref == 'refs/heads/develop' || startsWith(github.ref, 'refs/tags/v')
if: |
always() &&
github.ref == 'refs/heads/develop' || startsWith(github.ref, 'refs/tags/v') &&
needs.check.result == 'success' &&
(needs.check-e2e.result == 'skipped' || needs.check-e2e.result == 'success')
steps:
- uses: actions/checkout@v4

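The new `Publish to Maven` condition in the `main.yml` hunk above mixes `&&` and `||` without grouping. The operator table in the GitHub Actions expressions documentation gives `&&` higher precedence than `||`, so the expression most likely parses as `(always() && ref is develop) || (ref is a tag && check succeeded && e2e ok)`. Under that reading, a `develop` push would publish even when `check` fails. The following hypothetical Java model compares the two readings. Java's `&&` also binds tighter than `||`, so the boolean structure is the same. Job results are modelled as plain strings, and `PublishGate`, `asWritten` and `grouped` are illustrative names only.

```java
/**
 * Hypothetical model of the `Publish to Maven` gate from main.yml.
 * Job results are plain strings such as "success", "failure" or "skipped".
 */
public class PublishGate {

    // Mirrors the expression as written: always() && A || B && C && D
    // parses as (always() && A) || (B && C && D).
    public static boolean asWritten(String ref, String check, String e2e) {
        boolean always = true; // always() evaluates to true
        return always && ref.equals("refs/heads/develop")
            || ref.startsWith("refs/tags/v")
            && check.equals("success")
            && (e2e.equals("skipped") || e2e.equals("success"));
    }

    // Assumed intent: group the branch/tag test before the job-result checks.
    public static boolean grouped(String ref, String check, String e2e) {
        return (ref.equals("refs/heads/develop") || ref.startsWith("refs/tags/v"))
            && check.equals("success")
            && (e2e.equals("skipped") || e2e.equals("success"));
    }

    public static void main(String[] args) {
        // A develop push whose `check` job failed:
        System.out.println(asWritten("refs/heads/develop", "failure", "failure")); // true
        System.out.println(grouped("refs/heads/develop", "failure", "failure"));   // false
    }
}
```

With explicit parentheses around the branch/tag test, as in `grouped`, `check` would have to succeed on both `develop` and tag builds. The `Github Release` condition avoids the issue because it only uses `&&` at the top level.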
@@ -179,6 +179,8 @@ subprojects {
testImplementation 'org.hamcrest:hamcrest'
testImplementation 'org.hamcrest:hamcrest-library'
testImplementation 'org.exparity:hamcrest-date'

testImplementation 'org.assertj:assertj-core'
}

test {

@@ -124,6 +124,7 @@ kestra:
delay: 1s
maxDelay: ""

jdbc:
queues:
min-poll-interval: 25ms
max-poll-interval: 1000ms

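The two `queues` keys above, presumably under `kestra.jdbc`, bound how often the JDBC queue is polled. The hunk does not show how the poller moves between the two values. The following is only a hypothetical illustration of one common adaptive scheme, not Kestra's implementation: poll at the minimum interval while messages keep arriving, and double the wait while the queue is idle, capped at the maximum. The class `AdaptivePollInterval` and its doubling rule are assumptions.

```java
import java.time.Duration;

/**
 * Hypothetical adaptive poll interval bounded by a minimum and a maximum,
 * e.g. min-poll-interval: 25ms and max-poll-interval: 1000ms.
 * The doubling-on-idle rule is an illustrative assumption.
 */
public class AdaptivePollInterval {
    private final Duration min;
    private final Duration max;
    private Duration current;

    public AdaptivePollInterval(Duration min, Duration max) {
        if (min.isNegative() || min.isZero() || min.compareTo(max) > 0) {
            throw new IllegalArgumentException("expected 0 < min <= max");
        }
        this.min = min;
        this.max = max;
        this.current = min;
    }

    /** Returns how long to wait before the next poll, given whether the last poll found messages. */
    public Duration next(boolean foundMessages) {
        if (foundMessages) {
            current = min; // busy queue: poll again as soon as allowed
        } else {
            Duration doubled = current.multipliedBy(2);
            current = doubled.compareTo(max) > 0 ? max : doubled; // idle queue: back off, capped at max
        }
        return current;
    }

    public static void main(String[] args) {
        AdaptivePollInterval poll = new AdaptivePollInterval(Duration.ofMillis(25), Duration.ofMillis(1000));
        for (int i = 0; i < 7; i++) {
            System.out.print(poll.next(false).toMillis() + " "); // 50 100 200 400 800 1000 1000
        }
        System.out.println(poll.next(true).toMillis()); // 25
    }
}
```

The minimum keeps latency low under load, and the maximum caps the database query rate when nothing is queued. Other schemes, such as switching between the two values after a fixed idle period, would fit the same pair of settings.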
@@ -19,58 +19,60 @@ import org.apache.commons.lang3.ArrayUtils;
@Singleton
@Slf4j
public class MetricRegistry {
    public final static String METRIC_WORKER_JOB_PENDING_COUNT = "worker.job.pending";
    public final static String METRIC_WORKER_JOB_RUNNING_COUNT = "worker.job.running";
    public final static String METRIC_WORKER_JOB_THREAD_COUNT = "worker.job.thread";
    public final static String METRIC_WORKER_RUNNING_COUNT = "worker.running.count";
    public final static String METRIC_WORKER_QUEUED_DURATION = "worker.queued.duration";
    public final static String METRIC_WORKER_STARTED_COUNT = "worker.started.count";
    public final static String METRIC_WORKER_TIMEOUT_COUNT = "worker.timeout.count";
    public final static String METRIC_WORKER_ENDED_COUNT = "worker.ended.count";
    public final static String METRIC_WORKER_ENDED_DURATION = "worker.ended.duration";
    public final static String METRIC_WORKER_TRIGGER_DURATION = "worker.trigger.duration";
    public final static String METRIC_WORKER_TRIGGER_RUNNING_COUNT = "worker.trigger.running.count";
    public final static String METRIC_WORKER_TRIGGER_STARTED_COUNT = "worker.trigger.started.count";
    public final static String METRIC_WORKER_TRIGGER_ENDED_COUNT = "worker.trigger.ended.count";
    public final static String METRIC_WORKER_TRIGGER_ERROR_COUNT = "worker.trigger.error.count";
    public final static String METRIC_WORKER_TRIGGER_EXECUTION_COUNT = "worker.trigger.execution.count";
    public static final String METRIC_WORKER_JOB_PENDING_COUNT = "worker.job.pending";
    public static final String METRIC_WORKER_JOB_RUNNING_COUNT = "worker.job.running";
    public static final String METRIC_WORKER_JOB_THREAD_COUNT = "worker.job.thread";
    public static final String METRIC_WORKER_RUNNING_COUNT = "worker.running.count";
    public static final String METRIC_WORKER_QUEUED_DURATION = "worker.queued.duration";
    public static final String METRIC_WORKER_STARTED_COUNT = "worker.started.count";
    public static final String METRIC_WORKER_TIMEOUT_COUNT = "worker.timeout.count";
    public static final String METRIC_WORKER_ENDED_COUNT = "worker.ended.count";
    public static final String METRIC_WORKER_ENDED_DURATION = "worker.ended.duration";
    public static final String METRIC_WORKER_TRIGGER_DURATION = "worker.trigger.duration";
    public static final String METRIC_WORKER_TRIGGER_RUNNING_COUNT = "worker.trigger.running.count";
    public static final String METRIC_WORKER_TRIGGER_STARTED_COUNT = "worker.trigger.started.count";
    public static final String METRIC_WORKER_TRIGGER_ENDED_COUNT = "worker.trigger.ended.count";
    public static final String METRIC_WORKER_TRIGGER_ERROR_COUNT = "worker.trigger.error.count";
    public static final String METRIC_WORKER_TRIGGER_EXECUTION_COUNT = "worker.trigger.execution.count";

    public final static String EXECUTOR_TASKRUN_NEXT_COUNT = "executor.taskrun.next.count";
    public final static String EXECUTOR_TASKRUN_ENDED_COUNT = "executor.taskrun.ended.count";
    public final static String EXECUTOR_TASKRUN_ENDED_DURATION = "executor.taskrun.ended.duration";
    public final static String EXECUTOR_WORKERTASKRESULT_COUNT = "executor.workertaskresult.count";
    public final static String EXECUTOR_EXECUTION_STARTED_COUNT = "executor.execution.started.count";
    public final static String EXECUTOR_EXECUTION_END_COUNT = "executor.execution.end.count";
    public final static String EXECUTOR_EXECUTION_DURATION = "executor.execution.duration";
    public static final String EXECUTOR_TASKRUN_NEXT_COUNT = "executor.taskrun.next.count";
    public static final String EXECUTOR_TASKRUN_ENDED_COUNT = "executor.taskrun.ended.count";
    public static final String EXECUTOR_TASKRUN_ENDED_DURATION = "executor.taskrun.ended.duration";
    public static final String EXECUTOR_WORKERTASKRESULT_COUNT = "executor.workertaskresult.count";
    public static final String EXECUTOR_EXECUTION_STARTED_COUNT = "executor.execution.started.count";
    public static final String EXECUTOR_EXECUTION_END_COUNT = "executor.execution.end.count";
    public static final String EXECUTOR_EXECUTION_DURATION = "executor.execution.duration";

    public final static String METRIC_INDEXER_REQUEST_COUNT = "indexer.request.count";
    public final static String METRIC_INDEXER_REQUEST_DURATION = "indexer.request.duration";
    public final static String METRIC_INDEXER_REQUEST_RETRY_COUNT = "indexer.request.retry.count";
    public final static String METRIC_INDEXER_SERVER_DURATION = "indexer.server.duration";
    public final static String METRIC_INDEXER_MESSAGE_FAILED_COUNT = "indexer.message.failed.count";
    public final static String METRIC_INDEXER_MESSAGE_IN_COUNT = "indexer.message.in.count";
    public final static String METRIC_INDEXER_MESSAGE_OUT_COUNT = "indexer.message.out.count";
    public static final String METRIC_INDEXER_REQUEST_COUNT = "indexer.request.count";
    public static final String METRIC_INDEXER_REQUEST_DURATION = "indexer.request.duration";
    public static final String METRIC_INDEXER_REQUEST_RETRY_COUNT = "indexer.request.retry.count";
    public static final String METRIC_INDEXER_SERVER_DURATION = "indexer.server.duration";
    public static final String METRIC_INDEXER_MESSAGE_FAILED_COUNT = "indexer.message.failed.count";
    public static final String METRIC_INDEXER_MESSAGE_IN_COUNT = "indexer.message.in.count";
    public static final String METRIC_INDEXER_MESSAGE_OUT_COUNT = "indexer.message.out.count";

    public final static String SCHEDULER_LOOP_COUNT = "scheduler.loop.count";
    public final static String SCHEDULER_TRIGGER_COUNT = "scheduler.trigger.count";
    public final static String SCHEDULER_TRIGGER_DELAY_DURATION = "scheduler.trigger.delay.duration";
    public final static String SCHEDULER_EVALUATE_COUNT = "scheduler.evaluate.count";
    public final static String SCHEDULER_EXECUTION_RUNNING_DURATION = "scheduler.execution.running.duration";
    public final static String SCHEDULER_EXECUTION_MISSING_DURATION = "scheduler.execution.missing.duration";
    public static final String SCHEDULER_LOOP_COUNT = "scheduler.loop.count";
    public static final String SCHEDULER_TRIGGER_COUNT = "scheduler.trigger.count";
    public static final String SCHEDULER_TRIGGER_DELAY_DURATION = "scheduler.trigger.delay.duration";
    public static final String SCHEDULER_EVALUATE_COUNT = "scheduler.evaluate.count";
    public static final String SCHEDULER_EXECUTION_RUNNING_DURATION = "scheduler.execution.running.duration";
    public static final String SCHEDULER_EXECUTION_MISSING_DURATION = "scheduler.execution.missing.duration";

    public final static String STREAMS_STATE_COUNT = "stream.state.count";
    public static final String STREAMS_STATE_COUNT = "stream.state.count";

    public static final String JDBC_QUERY_DURATION = "jdbc.query.duration";

    public final static String JDBC_QUERY_DURATION = "jdbc.query.duration";
    public static final String QUEUE_BIG_MESSAGE_COUNT = "queue.big_message.count";

    public final static String TAG_TASK_TYPE = "task_type";
    public final static String TAG_TRIGGER_TYPE = "trigger_type";
    public final static String TAG_FLOW_ID = "flow_id";
    public final static String TAG_NAMESPACE_ID = "namespace_id";
    public final static String TAG_STATE = "state";
    public final static String TAG_ATTEMPT_COUNT = "attempt_count";
    public final static String TAG_WORKER_GROUP = "worker_group";
    public final static String TAG_TENANT_ID = "tenant_id";
    public static final String TAG_TASK_TYPE = "task_type";
    public static final String TAG_TRIGGER_TYPE = "trigger_type";
    public static final String TAG_FLOW_ID = "flow_id";
    public static final String TAG_NAMESPACE_ID = "namespace_id";
    public static final String TAG_STATE = "state";
    public static final String TAG_ATTEMPT_COUNT = "attempt_count";
    public static final String TAG_WORKER_GROUP = "worker_group";
    public static final String TAG_TENANT_ID = "tenant_id";
    public static final String TAG_CLASS_NAME = "class_name";

    @Inject
    private MeterRegistry meterRegistry;

@@ -0,0 +1,150 @@
package io.kestra.core.models.collectors;

import com.google.common.annotations.VisibleForTesting;
import io.kestra.core.repositories.ServiceInstanceRepositoryInterface;
import io.kestra.core.server.Service;
import io.kestra.core.server.ServiceInstance;

import java.math.BigDecimal;
import java.math.RoundingMode;
import java.time.Duration;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.LongSummaryStatistics;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Statistics about the number of running services over a given period.
 */
public record ServiceUsage(
    List<DailyServiceStatistics> dailyStatistics
) {

    /**
     * Daily statistics for a specific service type.
     *
     * @param type The service type.
     * @param values The statistic values.
     */
    public record DailyServiceStatistics(
        String type,
        List<DailyStatistics> values
    ) {
    }

    /**
     * Statistics about the number of services running at any given time interval (e.g., 15 minutes) over a day.
     *
     * @param date The {@link LocalDate}.
     * @param min The minimum number of services.
     * @param max The maximum number of services.
     * @param avg The average number of services.
     */
    public record DailyStatistics(
        LocalDate date,
        long min,
        long max,
        long avg
    ) {
    }
    public static ServiceUsage of(final Instant from,
                                  final Instant to,
                                  final ServiceInstanceRepositoryInterface repository,
                                  final Duration interval) {

        List<DailyServiceStatistics> statistics = Arrays
            .stream(Service.ServiceType.values())
            .map(type -> of(from, to, repository, type, interval))
            .toList();
        return new ServiceUsage(statistics);
    }

    private static DailyServiceStatistics of(final Instant from,
                                             final Instant to,
                                             final ServiceInstanceRepositoryInterface repository,
                                             final Service.ServiceType serviceType,
                                             final Duration interval) {
        return of(serviceType, interval, repository.findAllInstancesBetween(serviceType, from, to));
    }

    @VisibleForTesting
    static DailyServiceStatistics of(final Service.ServiceType serviceType,
                                     final Duration interval,
                                     final List<ServiceInstance> instances) {
        // Compute the number of running services per time interval.
        final long timeIntervalInMillis = interval.toMillis();

        final Map<Long, Long> aggregatePerTimeIntervals = instances
            .stream()
            .flatMap(instance -> {
                List<ServiceInstance.TimestampedEvent> events = instance.events();
                long start = 0;
                long end = 0;
                for (ServiceInstance.TimestampedEvent event : events) {
                    long epochMilli = event.ts().toEpochMilli();
                    if (event.state().equals(Service.ServiceState.RUNNING)) {
                        start = epochMilli;
                    }
                    else if (event.state().equals(Service.ServiceState.NOT_RUNNING) && end == 0) {
                        end = epochMilli;
                    }
                    else if (event.state().equals(Service.ServiceState.TERMINATED_GRACEFULLY)) {
                        end = epochMilli; // more precise than NOT_RUNNING
                    }
                    else if (event.state().equals(Service.ServiceState.TERMINATED_FORCED)) {
                        end = epochMilli; // more precise than NOT_RUNNING
                    }
                }

                if (instance.state().equals(Service.ServiceState.RUNNING)) {
                    end = Instant.now().toEpochMilli();
                }

                if (start != 0 && end != 0) {
                    // align to epoch-time by removing precision.
                    start = (start / timeIntervalInMillis) * timeIntervalInMillis;

                    // approximate the number of time intervals for the current service
                    int intervals = (int) ((end - start) / timeIntervalInMillis);

                    // compute all time intervals
                    List<Long> keys = new ArrayList<>(intervals);
                    while (start < end) {
                        keys.add(start);
                        start = start + timeIntervalInMillis; // Next window
                    }
                    return keys.stream();
                }
                return Stream.empty(); // invalid service
            })
            .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));

        // Aggregate per day
        List<DailyStatistics> dailyStatistics = aggregatePerTimeIntervals.entrySet()
            .stream()
            .collect(Collectors.groupingBy(entry -> {
                Long epochTimeMilli = entry.getKey();
                return Instant.ofEpochMilli(epochTimeMilli).atZone(ZoneId.systemDefault()).toLocalDate();
            }, Collectors.toList()))
            .entrySet()
            .stream()
            .map(entry -> {
                LongSummaryStatistics statistics = entry.getValue().stream().collect(Collectors.summarizingLong(Map.Entry::getValue));
                return new DailyStatistics(
                    entry.getKey(),
                    statistics.getMin(),
                    statistics.getMax(),
                    BigDecimal.valueOf(statistics.getAverage()).setScale(2, RoundingMode.HALF_EVEN).longValue()
                );
            })
            .toList();
        return new DailyServiceStatistics(serviceType.name(), dailyStatistics);
    }
}

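The bucketing in `ServiceUsage` can be tried without Kestra's repository or `ServiceInstance` types. The stand-alone sketch below makes three simplifying assumptions:

- Each instance is already reduced to a hypothetical `Span` holding its start and end in epoch milliseconds, with `0` meaning unknown.
- Days are grouped in UTC instead of `ZoneId.systemDefault()`, so the output does not depend on the machine's time zone.
- `ServiceUsageSketch`, `Span` and `Daily` are illustrative names and are not part of Kestra.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.util.ArrayList;
import java.util.List;
import java.util.LongSummaryStatistics;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Simplified, stand-alone sketch of the bucketing used by ServiceUsage.
 * A hypothetical Span replaces ServiceInstance and its events.
 */
public class ServiceUsageSketch {

    /** Running period of one service instance, in epoch milliseconds; 0 means unknown. */
    public record Span(long startMillis, long endMillis) {}

    /** Per-day summary of the per-window counts. */
    public record Daily(LocalDate date, long min, long max, long avg) {}

    /** Counts how many spans overlap each interval-aligned window. */
    public static Map<Long, Long> countPerWindow(List<Span> spans, long intervalMillis) {
        return spans.stream()
            .flatMap(span -> {
                if (span.startMillis() == 0 || span.endMillis() == 0) {
                    return Stream.<Long>empty(); // incomplete span: skipped, like an "invalid service"
                }
                // Align the start down to a window boundary, then enumerate windows until the end.
                long window = (span.startMillis() / intervalMillis) * intervalMillis;
                List<Long> keys = new ArrayList<>();
                while (window < span.endMillis()) {
                    keys.add(window);
                    window += intervalMillis;
                }
                return keys.stream();
            })
            .collect(Collectors.groupingBy(w -> w, Collectors.counting()));
    }

    /** Groups windows by UTC day and keeps the min, max and average count per day. */
    public static List<Daily> perDay(Map<Long, Long> perWindow) {
        TreeMap<LocalDate, LongSummaryStatistics> byDay = perWindow.entrySet().stream()
            .collect(Collectors.groupingBy(
                e -> Instant.ofEpochMilli(e.getKey()).atZone(ZoneOffset.UTC).toLocalDate(),
                TreeMap::new,
                Collectors.summarizingLong(e -> e.getValue())));
        return byDay.entrySet().stream()
            .map(day -> new Daily(
                day.getKey(),
                day.getValue().getMin(),
                day.getValue().getMax(),
                // Same rounding as the original: two decimals, then longValue() drops the fraction.
                BigDecimal.valueOf(day.getValue().getAverage()).setScale(2, RoundingMode.HALF_EVEN).longValue()))
            .toList();
    }
}
```

With a 15-minute interval, an instance running from 00:05 to 00:40 UTC is counted in the 00:00, 00:15 and 00:30 windows. As in the original, `setScale(2, RoundingMode.HALF_EVEN)` followed by `longValue()` discards the fraction, so a daily average of 1.67 services is reported as 1.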
@@ -62,4 +62,8 @@ public class Usage {

    @Valid
    private final ExecutionUsage executions;

    @Valid
    @Nullable
    private ServiceUsage services;
}

@@ -358,4 +358,8 @@ public class Flow extends AbstractFlow {
            .deleted(true)
            .build();
    }

    public FlowWithSource withSource(String source) {
        return FlowWithSource.of(this, source);
    }
}

@@ -1,6 +1,5 @@

|

||||

package io.kestra.core.models.flows;

|

||||

|

||||

import com.fasterxml.jackson.annotation.JsonInclude;

|

||||

import com.fasterxml.jackson.annotation.JsonSetter;

|

||||

import com.fasterxml.jackson.annotation.JsonSubTypes;

|

||||

import com.fasterxml.jackson.annotation.JsonTypeInfo;

|

||||

@@ -43,7 +42,6 @@ import lombok.experimental.SuperBuilder;

|

||||

@JsonSubTypes.Type(value = MultiselectInput.class, name = "MULTISELECT"),

|

||||

@JsonSubTypes.Type(value = YamlInput.class, name = "YAML")

|

||||

})

|

||||

@JsonInclude(JsonInclude.Include.NON_DEFAULT)

|

||||

public abstract class Input<T> implements Data {

|

||||

@Schema(

|

||||

title = "The ID of the input."

|

||||

|

||||

@@ -4,10 +4,7 @@ import com.fasterxml.jackson.core.JsonGenerator;

|

||||

import com.fasterxml.jackson.core.JsonParser;

|

||||

import com.fasterxml.jackson.core.JsonProcessingException;

|

||||

import com.fasterxml.jackson.core.type.TypeReference;

|

||||

import com.fasterxml.jackson.databind.DeserializationContext;

|

||||

import com.fasterxml.jackson.databind.JavaType;

|

||||

import com.fasterxml.jackson.databind.ObjectMapper;

|

||||

import com.fasterxml.jackson.databind.SerializerProvider;

|

||||

import com.fasterxml.jackson.databind.*;

|

||||

import com.fasterxml.jackson.databind.annotation.JsonDeserialize;

|

||||

import com.fasterxml.jackson.databind.annotation.JsonSerialize;

|

||||

import com.fasterxml.jackson.databind.deser.std.StdDeserializer;

|

||||

@@ -25,6 +22,7 @@ import java.io.IOException;

|

||||

import java.io.Serial;

|

||||

import java.util.List;

|

||||

import java.util.Map;

|

||||

import java.util.Objects;

|

||||

|

||||

/**

|

||||

* Define a plugin properties that will be rendered and converted to a target type at use time.

|

||||

@@ -37,7 +35,12 @@ import java.util.Map;

|

||||

@NoArgsConstructor

|

||||

@AllArgsConstructor(access = AccessLevel.PACKAGE)

|

||||

public class Property<T> {

|

||||

private static final ObjectMapper MAPPER = JacksonMapper.ofJson();

|

||||

// By default, durations are stored as numbers.

|

||||

// We cannot change that globally, as in JDBC/Elastic 'execution.state.duration' must be a number to be able to aggregate them.

|

||||

// So we only change it here.

|

||||

private static final ObjectMapper MAPPER = JacksonMapper.ofJson()

|

||||

.copy()

|

||||

.configure(SerializationFeature.WRITE_DURATIONS_AS_TIMESTAMPS, false);

|

||||

|

||||

private String expression;

|

||||

private T value;

|

||||
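
The `Property` mapper above is copied from the shared JSON mapper with `SerializationFeature.WRITE_DURATIONS_AS_TIMESTAMPS` disabled, so `java.time.Duration` values are written as ISO-8601 text instead of the numeric form that, per the code comment, stays the global default. The standard library alone can show the two shapes involved. This sketch does not call Jackson, and `DurationForms` is a hypothetical helper; the numeric method only approximates what a timestamp-style serializer typically emits (seconds with a nanosecond fraction).

```java
import java.math.BigDecimal;
import java.time.Duration;

// Illustrates two representations of a Duration; this does not call Jackson.
final class DurationForms {
    // ISO-8601 text, e.g. 90 seconds -> "PT1M30S" (what Duration.toString() returns).
    static String isoText(Duration d) {
        return d.toString();
    }

    // Seconds with a 9-digit nanosecond fraction, e.g. 1.5 s -> 1.500000000.
    static BigDecimal numericSeconds(Duration d) {
        return BigDecimal.valueOf(d.getSeconds()).add(BigDecimal.valueOf(d.getNano(), 9));
    }

    // The ISO form can be parsed back without knowing the unit.
    static Duration fromIso(String text) {
        return Duration.parse(text);
    }
}
```

The ISO text is self-describing, which is convenient for rendered plugin properties, while a plain number is what a database or search index can sum and average.
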

@@ -185,6 +188,18 @@ public class Property<T> {
return value != null ? value.toString() : expression;
}

@Override
public boolean equals(Object o) {
if (o == null || getClass() != o.getClass()) return false;
Property<?> property = (Property<?>) o;
return Objects.equals(expression, property.expression);
}

@Override
public int hashCode() {
return Objects.hash(expression);
}

// used only by the serializer
String getExpression() {
return this.expression;

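
With the new `equals`/`hashCode`, two `Property` instances are equal whenever their `expression` strings match, whatever their `value` field holds. A hypothetical stand-in class with the same two fields illustrates that contract; it is not the Kestra `Property` type, and it adds the usual `this == o` shortcut that the diff omits.

```java
import java.util.Objects;

// Hypothetical stand-in with the same two fields as Property<T>; equality ignores `value`.
final class ExpressionHolder<T> {
    private final String expression; // raw, unrendered expression
    private final T value;           // typed value, not part of equality

    ExpressionHolder(String expression, T value) {
        this.expression = expression;
        this.value = value;
    }

    T value() {
        return value;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (o == null || getClass() != o.getClass()) return false;
        ExpressionHolder<?> other = (ExpressionHolder<?>) o;
        return Objects.equals(expression, other.expression);
    }

    @Override
    public int hashCode() {
        // Uses the same field as equals() so equal objects share a hash code.
        return Objects.hash(expression);
    }
}
```

With this definition, two instances whose `expression` is `null` also compare equal regardless of `value`, and hash-based collections deduplicate by expression alone.
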

@@ -23,9 +23,6 @@ public class Trigger extends TriggerContext {
@Nullable
private String executionId;

@Nullable
private State.Type executionCurrentState;

@Nullable
private Instant updatedDate;

@@ -39,7 +36,6 @@ public class Trigger extends TriggerContext {
protected Trigger(TriggerBuilder<?, ?> b) {
super(b);
this.executionId = b.executionId;
this.executionCurrentState = b.executionCurrentState;
this.updatedDate = b.updatedDate;
this.evaluateRunningDate = b.evaluateRunningDate;
}
@@ -141,7 +137,6 @@ public class Trigger extends TriggerContext {
.date(trigger.getDate())
.nextExecutionDate(trigger.getNextExecutionDate())
.executionId(execution.getId())
.executionCurrentState(execution.getState().getCurrent())
.updatedDate(Instant.now())
.backfill(trigger.getBackfill())
.stopAfter(trigger.getStopAfter())

@@ -11,6 +11,7 @@ import io.kestra.core.services.KVStoreService;
import io.kestra.core.storages.Storage;
import io.kestra.core.storages.StorageInterface;
import io.kestra.core.storages.kv.KVStore;
import io.kestra.core.utils.ListUtils;
import io.kestra.core.utils.VersionProvider;
import io.micronaut.context.ApplicationContext;
import io.micronaut.context.annotation.Value;
@@ -30,7 +31,6 @@ import java.nio.file.Path;
import java.security.GeneralSecurityException;
import java.util.*;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Supplier;
import java.util.stream.Collectors;

import static io.kestra.core.utils.MapUtils.mergeWithNullableValues;
@@ -67,6 +67,7 @@ public class DefaultRunContext extends RunContext {
private String triggerExecutionId;
private Storage storage;
private Map<String, Object> pluginConfiguration;
private List<String> secretInputs;

private final AtomicBoolean isInitialized = new AtomicBoolean(false);

@@ -98,6 +99,15 @@ public class DefaultRunContext extends RunContext {
return variables;
}

/**
* {@inheritDoc}
*/
@Override
@JsonInclude
public List<String> getSecretInputs() {
return secretInputs;
}

@JsonIgnore
public ApplicationContext getApplicationContext() {
return applicationContext;
@@ -123,6 +133,17 @@ public class DefaultRunContext extends RunContext {

void setLogger(final RunContextLogger logger) {
this.logger = logger;

// this is used when a run context is re-hydrated so we need to add again the secrets from the inputs
if (!ListUtils.isEmpty(secretInputs) && getVariables().containsKey("inputs")) {
Map<String, Object> inputs = (Map<String, Object>) getVariables().get("inputs");
for (String secretInput : secretInputs) {
String secret = (String) inputs.get(secretInput);
if (secret != null) {
logger.usedSecret(secret);
}
}
}
}

void setPluginConfiguration(final Map<String, Object> pluginConfiguration) {
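
`setLogger` walks the `secretInputs` ids, looks up each input's value and registers it with `logger.usedSecret(...)` so that the value can be hidden in task logs after a run context is re-hydrated. The masking side is not part of this diff. The sketch below assumes a logger that replaces every registered value with a fixed `******` placeholder; `MaskingLogger`, `mask` and `registerSecretInputs` are hypothetical names, and unlike the diff's `(String)` cast, the sketch skips non-string values instead of throwing.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical logger that hides every registered secret value in messages.
final class MaskingLogger {
    private static final String MASK = "******"; // assumed placeholder text
    private final Set<String> secrets = new LinkedHashSet<>();

    // Registers a value to hide; null or empty values are ignored.
    void usedSecret(String secret) {
        if (secret != null && !secret.isEmpty()) {
            secrets.add(secret);
        }
    }

    // Replaces registered secrets, longest first, so a secret containing another is fully hidden.
    String mask(String message) {
        List<String> ordered = new ArrayList<>(secrets);
        ordered.sort((x, y) -> Integer.compare(y.length(), x.length()));
        String masked = message;
        for (String secret : ordered) {
            masked = masked.replace(secret, MASK);
        }
        return masked;
    }

    // Same loop shape as the diff: register the value of each input listed in secretInputs.
    static void registerSecretInputs(MaskingLogger logger, Map<String, Object> inputs, List<String> secretInputs) {
        if (logger == null || inputs == null || secretInputs == null || secretInputs.isEmpty()) {
            return;
        }
        for (String secretInput : secretInputs) {
            if (inputs.get(secretInput) instanceof String secret) {
                logger.usedSecret(secret);
            }
        }
    }
}
```
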

@@ -179,7 +200,7 @@ public class DefaultRunContext extends RunContext {
@Override
@SuppressWarnings("unchecked")
public String render(String inline, Map<String, Object> variables) throws IllegalVariableEvaluationException {
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, variables));
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, decryptVariables(variables)));
}

/**
@@ -196,7 +217,7 @@ public class DefaultRunContext extends RunContext {
@Override
@SuppressWarnings("unchecked")
public List<String> render(List<String> inline, Map<String, Object> variables) throws IllegalVariableEvaluationException {
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, variables));
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, decryptVariables(variables)));
}

/**
@@ -213,7 +234,7 @@ public class DefaultRunContext extends RunContext {
@Override
@SuppressWarnings("unchecked")
public Set<String> render(Set<String> inline, Map<String, Object> variables) throws IllegalVariableEvaluationException {
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, variables));
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, decryptVariables(variables)));
}

@Override
@@ -224,7 +245,7 @@ public class DefaultRunContext extends RunContext {
@Override
@SuppressWarnings("unchecked")
public Map<String, Object> render(Map<String, Object> inline, Map<String, Object> variables) throws IllegalVariableEvaluationException {
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, variables));
return variableRenderer.render(inline, mergeWithNullableValues(this.variables, decryptVariables(variables)));
}

@Override
@@ -239,7 +260,7 @@ public class DefaultRunContext extends RunContext {
return null;
}

Map<String, Object> allVariables = mergeWithNullableValues(this.variables, variables);
Map<String, Object> allVariables = mergeWithNullableValues(this.variables, decryptVariables(variables));
return inline
.entrySet()
.stream()
@@ -350,6 +371,14 @@ public class DefaultRunContext extends RunContext {
return this;
}

private Map<String, Object> decryptVariables(Map<String, Object> variables) {
if (secretKey.isPresent()) {
final Secret secret = new Secret(secretKey, logger);
return secret.decrypt(variables);
}
return variables;
}

@SuppressWarnings("unchecked")
private Map<String, String> metricsTags() {
ImmutableMap.Builder<String, String> builder = ImmutableMap.builder();
@@ -488,6 +517,7 @@ public class DefaultRunContext extends RunContext {
private String triggerExecutionId;
private RunContextLogger logger;
private KVStoreService kvStoreService;
private List<String> secretInputs;

/**
* Builds the new {@link DefaultRunContext} object.
@@ -507,6 +537,7 @@ public class DefaultRunContext extends RunContext {
context.storage = storage;
context.triggerExecutionId = triggerExecutionId;
context.kvStoreService = kvStoreService;
context.secretInputs = secretInputs;
return context;
}
}

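
The new `decryptVariables` helper decrypts the caller-supplied variables only when a secret key is configured and otherwise passes them through unchanged, before they are merged with the context variables for rendering. A minimal sketch of that pass-through pattern using `Optional.map(...).orElse(...)` follows; the `decrypt` function parameter is a hypothetical stand-in for `Secret.decrypt`, whose real behaviour is not shown in this diff.

```java
import java.util.Map;
import java.util.Optional;
import java.util.function.BiFunction;

final class ConditionalDecrypt {
    // Applies `decrypt` only when a key is present; otherwise returns the same map instance.
    // `decrypt` is a hypothetical stand-in for the real decryption routine.
    static Map<String, Object> decryptVariables(
            Optional<String> secretKey,
            Map<String, Object> variables,
            BiFunction<String, Map<String, Object>, Map<String, Object>> decrypt) {
        return secretKey
            .map(key -> decrypt.apply(key, variables))
            .orElse(variables);
    }
}
```

One behavioural difference from the `if (secretKey.isPresent())` form in the diff: if `decrypt` returned `null`, `Optional.map` would fall back to the undecrypted map instead of returning `null`.
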

@@ -325,9 +325,7 @@ public class ExecutorService {
);

if (!nexts.isEmpty()) {
return nexts.stream()
.map(throwFunction(NextTaskRun::getTaskRun))
.toList();
return saveFlowableOutput(nexts, executor);
}
} catch (Exception e) {
log.warn("Unable to resolve the next tasks to run", e);
@@ -437,7 +435,6 @@ public class ExecutorService {
}

return executor.withTaskRun(
// TODO - saveFlowableOutput seems to be only useful for Template
this.saveFlowableOutput(nextTaskRuns, executor),
"handleNext"
);
@@ -748,7 +745,8 @@ public class ExecutorService {
.map(WorkerGroup::getKey)
.orElse(null);
// Check if the worker group exist
if (workerGroupExecutorInterface.isWorkerGroupExistForKey(workerGroup)) {
String tenantId = executor.getFlow().getTenantId();
if (workerGroupExecutorInterface.isWorkerGroupExistForKey(workerGroup, tenantId)) {
// Check whether at-least one worker is available
if (workerGroupExecutorInterface.isWorkerGroupAvailableForKey(workerGroup)) {
return workerTask;

@@ -5,6 +5,7 @@ import com.google.common.annotations.VisibleForTesting;
import com.google.common.collect.ImmutableMap;
import io.kestra.core.encryption.EncryptionService;
import io.kestra.core.exceptions.IllegalVariableEvaluationException;
import io.kestra.core.exceptions.KestraRuntimeException;
import io.kestra.core.models.executions.Execution;
import io.kestra.core.models.flows.Data;
import io.kestra.core.models.flows.DependsOn;
@@ -18,6 +19,7 @@ import io.kestra.core.models.flows.input.ItemTypeInterface;
import io.kestra.core.models.tasks.common.EncryptedString;
import io.kestra.core.models.validations.ManualConstraintViolation;
import io.kestra.core.serializers.JacksonMapper;
import io.kestra.core.storages.StorageContext;
import io.kestra.core.storages.StorageInterface;
import io.kestra.core.utils.ListUtils;
import io.kestra.core.utils.MapUtils;
@@ -33,6 +35,7 @@ import org.reactivestreams.Publisher;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import reactor.core.publisher.Flux;
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Schedulers;

import java.io.File;

@@ -90,31 +93,14 @@ public class FlowInputOutput {
* @param inputs The Flow's inputs.
* @param execution The Execution.
* @param data The Execution's inputs data.
* @param deleteInputsFromStorage Specifies whether inputs stored on internal storage should be deleted before returning.
* @return The list of {@link InputAndValue}.
*/
public List<InputAndValue> validateExecutionInputs(final List<Input<?>> inputs,
public Mono<List<InputAndValue>> validateExecutionInputs(final List<Input<?>> inputs,
final Execution execution,
final Publisher<CompletedPart> data,
final boolean deleteInputsFromStorage) throws IOException {
if (ListUtils.isEmpty(inputs)) return Collections.emptyList();
final Publisher<CompletedPart> data) {
if (ListUtils.isEmpty(inputs)) return Mono.just(Collections.emptyList());

Map<String, ?> dataByInputId = readData(inputs, execution, data);

List<InputAndValue> values = this.resolveInputs(inputs, execution, dataByInputId);
if (deleteInputsFromStorage) {
values.stream()
.filter(it -> it.input() instanceof FileInput && Objects.nonNull(it.value()))
.forEach(it -> {
try {
URI uri = URI.create(it.value().toString());
storageInterface.delete(execution.getTenantId(), uri);
} catch (IllegalArgumentException | IOException e) {
log.debug("Failed to remove execution input after validation [{}]", it.value(), e);
}
});
}
return values;
return readData(inputs, execution, data, false).map(inputData -> resolveInputs(inputs, execution, inputData));
}

/**
@@ -125,9 +111,9 @@ public class FlowInputOutput {
* @param data The Execution's inputs data.
* @return The Map of typed inputs.
*/
public Map<String, Object> readExecutionInputs(final Flow flow,
public Mono<Map<String, Object>> readExecutionInputs(final Flow flow,
final Execution execution,
final Publisher<CompletedPart> data) throws IOException {
final Publisher<CompletedPart> data) {
return this.readExecutionInputs(flow.getInputs(), execution, data);
}

@@ -139,39 +125,58 @@ public class FlowInputOutput {
* @param data The Execution's inputs data.
* @return The Map of typed inputs.
*/
public Map<String, Object> readExecutionInputs(final List<Input<?>> inputs,
final Execution execution,
final Publisher<CompletedPart> data) throws IOException {
return this.readExecutionInputs(inputs, execution, readData(inputs, execution, data));
public Mono<Map<String, Object>> readExecutionInputs(final List<Input<?>> inputs,
final Execution execution,
final Publisher<CompletedPart> data) {
return readData(inputs, execution, data, true).map(inputData -> this.readExecutionInputs(inputs, execution, inputData));
}

private Map<String, ?> readData(List<Input<?>> inputs, Execution execution, Publisher<CompletedPart> data) throws IOException {
private Mono<Map<String, Object>> readData(List<Input<?>> inputs, Execution execution, Publisher<CompletedPart> data, boolean uploadFiles) {
return Flux.from(data)
.subscribeOn(Schedulers.boundedElastic())
.map(throwFunction(input -> {
.publishOn(Schedulers.boundedElastic())
.<AbstractMap.SimpleEntry<String, String>>handle((input, sink) -> {
if (input instanceof CompletedFileUpload fileUpload) {
final String fileExtension = FileInput.findFileInputExtension(inputs, fileUpload.getFilename());
File tempFile = File.createTempFile(fileUpload.getFilename() + "_", fileExtension);
try (var inputStream = fileUpload.getInputStream();
var outputStream = new FileOutputStream(tempFile)) {
long transferredBytes = inputStream.transferTo(outputStream);
if (transferredBytes == 0) {
throw new RuntimeException("Can't upload file: " + fileUpload.getFilename());
}
if (!uploadFiles) {
final String fileExtension = FileInput.findFileInputExtension(inputs, fileUpload.getFilename());
URI from = URI.create("kestra://" + StorageContext
.forInput(execution, fileUpload.getFilename(), fileUpload.getFilename() + fileExtension)
.getContextStorageURI()
);
fileUpload.discard();
sink.next(new AbstractMap.SimpleEntry<>(fileUpload.getFilename(), from.toString()));
} else {
try {
final String fileExtension = FileInput.findFileInputExtension(inputs, fileUpload.getFilename());

URI from = storageInterface.from(execution, fileUpload.getFilename(), tempFile);
return new AbstractMap.SimpleEntry<>(fileUpload.getFilename(), from.toString());
} finally {
if (!tempFile.delete()) {
tempFile.deleteOnExit();
File tempFile = File.createTempFile(fileUpload.getFilename() + "_", fileExtension);
try (var inputStream = fileUpload.getInputStream();
var outputStream = new FileOutputStream(tempFile)) {
long transferredBytes = inputStream.transferTo(outputStream);
if (transferredBytes == 0) {
sink.error(new KestraRuntimeException("Can't upload file: " + fileUpload.getFilename()));
return;
}
URI from = storageInterface.from(execution, fileUpload.getFilename(), tempFile);
sink.next(new AbstractMap.SimpleEntry<>(fileUpload.getFilename(), from.toString()));
} finally {
if (!tempFile.delete()) {
tempFile.deleteOnExit();
}
}
} catch (IOException e) {
fileUpload.discard();
sink.error(e);
}
}
} else {
return new AbstractMap.SimpleEntry<>(input.getName(), new String(input.getBytes()));
try {
sink.next(new AbstractMap.SimpleEntry<>(input.getName(), new String(input.getBytes())));
} catch (IOException e) {
sink.error(e);
}
}
}))
.collectMap(AbstractMap.SimpleEntry::getKey, AbstractMap.SimpleEntry::getValue)
.block();
})
.collectMap(AbstractMap.SimpleEntry::getKey, AbstractMap.SimpleEntry::getValue);
}

/**

@@ -404,7 +409,8 @@ public class FlowInputOutput {
yield EncryptionService.encrypt(secretKey.get(), (String) current);
}
case INT -> current instanceof Integer ? current : Integer.valueOf((String) current);
case FLOAT -> current instanceof Float ? current : Float.valueOf((String) current);
// Assuming that after the render we must have a double/int, so we can safely use its toString representation
case FLOAT -> current instanceof Float ? current : Float.valueOf(current.toString());
case BOOLEAN -> current instanceof Boolean ? current : Boolean.valueOf((String) current);
case DATETIME -> Instant.parse(((String) current));
case DATE -> LocalDate.parse(((String) current));

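
The `FLOAT` branch changes from casting `current` to `String` to calling `current.toString()`, which, per the added comment, covers rendered values that are already a `Double` or `Integer`. The sketch below reproduces both variants in isolation (`FloatCoercion` is a hypothetical class name) to show the old cast failing with a `ClassCastException` where the new expression succeeds.

```java
// Minimal sketch of the FLOAT branch before and after the change; `current` stands for
// the rendered input value, which may be a String, Double or Integer.
final class FloatCoercion {
    // Old behaviour: only works when the rendered value is a String.
    static Object oldParse(Object current) {
        return current instanceof Float ? current : Float.valueOf((String) current);
    }

    // New behaviour: any value whose toString() is a valid float literal is accepted.
    static Object newParse(Object current) {
        return current instanceof Float ? current : Float.valueOf(current.toString());
    }
}
```

Parsing through `toString()` still narrows to `float`, so a rendered `double` with more precision than a `float` can hold is rounded.
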

@@ -47,6 +47,12 @@ public abstract class RunContext {
@JsonInclude
public abstract Map<String, Object> getVariables();

/**
* Returns the list of inputs of type SECRET.
*/
@JsonInclude
public abstract List<String> getSecretInputs();

public abstract String render(String inline) throws IllegalVariableEvaluationException;

public abstract Object renderTyped(String inline) throws IllegalVariableEvaluationException;

@@ -5,6 +5,7 @@ import io.kestra.core.metrics.MetricRegistry;
import io.kestra.core.models.executions.Execution;
import io.kestra.core.models.executions.TaskRun;
import io.kestra.core.models.flows.Flow;
import io.kestra.core.models.flows.Type;
import io.kestra.core.models.tasks.Task;
import io.kestra.core.models.triggers.AbstractTrigger;
import io.kestra.core.plugins.PluginConfigurations;
@@ -15,12 +16,12 @@ import io.kestra.core.storages.StorageContext;
import io.kestra.core.storages.StorageInterface;
import io.micronaut.context.ApplicationContext;
import io.micronaut.context.annotation.Value;
import jakarta.annotation.Nullable;
import jakarta.inject.Inject;
import jakarta.inject.Singleton;
import jakarta.validation.constraints.NotNull;

import java.net.URI;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;
@@ -83,8 +84,10 @@ public class RunContextFactory {
.withFlow(flow)
.withExecution(execution)
.withDecryptVariables(true)
.withSecretInputs(secretInputsFromFlow(flow))
)
.build(runContextLogger))
.withSecretInputs(secretInputsFromFlow(flow))
.build();
}

@@ -107,8 +110,10 @@ public class RunContextFactory {
.withExecution(execution)
.withTaskRun(taskRun)
.withDecryptVariables(decryptVariables)
.withSecretInputs(secretInputsFromFlow(flow))
.build(runContextLogger))
.withKvStoreService(kvStoreService)
.withSecretInputs(secretInputsFromFlow(flow))
.build();
}

@@ -122,8 +127,10 @@ public class RunContextFactory {
.withVariables(newRunVariablesBuilder()
.withFlow(flow)
.withTrigger(trigger)
.withSecretInputs(secretInputsFromFlow(flow))
.build(runContextLogger)
)
.withSecretInputs(secretInputsFromFlow(flow))
.build();
}

@@ -135,6 +142,7 @@ public class RunContextFactory {
.withLogger(runContextLogger)
.withStorage(new InternalStorage(runContextLogger.logger(), StorageContext.forFlow(flow), storageInterface, flowService))
.withVariables(variables)
.withSecretInputs(secretInputsFromFlow(flow))
.build();
}


@@ -177,6 +185,16 @@ public class RunContextFactory {
return of(Map.of());
}

private List<String> secretInputsFromFlow(Flow flow) {
if (flow == null || flow.getInputs() == null) {
return Collections.emptyList();
}

return flow.getInputs().stream()
.filter(input -> input.getType() == Type.SECRET)
.map(input -> input.getId()).toList();
}

private DefaultRunContext.Builder newBuilder() {
return new DefaultRunContext.Builder()
// inject mandatory services and config

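
`secretInputsFromFlow` reduces a flow's inputs to the ids of those whose type is `SECRET`, returning an empty list when the flow or its inputs are missing. A stand-alone sketch of the same filter follows, with a hypothetical `InputDef` record and `InputType` enum standing in for Kestra's `Input<?>` and `Type`.

```java
import java.util.Collections;
import java.util.List;

final class SecretInputs {
    enum InputType { STRING, INT, SECRET }          // hypothetical subset of input types
    record InputDef(String id, InputType type) {}   // hypothetical stand-in for Input<?>

    // Returns the ids of all SECRET inputs, in declaration order; null input lists yield an empty list.
    static List<String> secretInputIds(List<InputDef> inputs) {
        if (inputs == null) {
            return Collections.emptyList();
        }
        return inputs.stream()
            .filter(input -> input.type() == InputType.SECRET)
            .map(InputDef::id)
            .toList();
    }
}
```
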

@@ -9,6 +9,7 @@ import io.kestra.core.models.flows.State;
import io.kestra.core.models.flows.input.SecretInput;
import io.kestra.core.models.tasks.Task;
import io.kestra.core.models.triggers.AbstractTrigger;
import io.kestra.core.utils.ListUtils;
import lombok.AllArgsConstructor;
import lombok.With;

@@ -125,6 +126,8 @@ public final class RunVariables {

Builder withGlobals(Map<?, ?> globals);

Builder withSecretInputs(List<String> secretInputs);

/**
* Builds the immutable map of run variables.
*
@@ -152,6 +155,7 @@ public final class RunVariables {
protected Map<String, ?> envs;
protected Map<?, ?> globals;
private final Optional<String> secretKey;
private List<String> secretInputs;

public DefaultBuilder() {
this(Optional.empty());
@@ -252,6 +256,16 @@ public final class RunVariables {

if (!inputs.isEmpty()) {
builder.put("inputs", inputs);

// if a secret input is used, add it to the list of secrets to mask on the logger
if (logger != null && !ListUtils.isEmpty(secretInputs)) {
for (String secretInput : secretInputs) {
String secret = (String) inputs.get(secretInput);
if (secret != null) {
logger.usedSecret(secret);
}
}
}
}